Agent Memory That Doesn’t Leak: A 2025 Playbook for Reliable, Compliant AI Agents

Design patterns, guardrails, and KPIs to make agent memory useful—without blowing up risk, cost, or customer trust.

Why agent memory is suddenly a board‑level topic

Agent platforms from OpenAI (AgentKit), Salesforce (Agentforce 360), and Microsoft/Copilot are racing to make agents production‑ready. But the moment agents remember things, you inherit new obligations: privacy, security, auditability, and cost. Done right, memory boosts resolve rates and reduces toil; done wrong, it leaks PII, hallucinates context, and tanks ROI. citeturn0search0turn0search2turn1news13

Recent news underscores both sides: big funding for agent startups putting bots on the frontline, new interoperability standards (A2A/MCP), and cautionary research showing agents fail in realistic marketplaces without robust guardrails. Memory design is the linchpin that connects all three. citeturn0search1turn0search4turn0search5

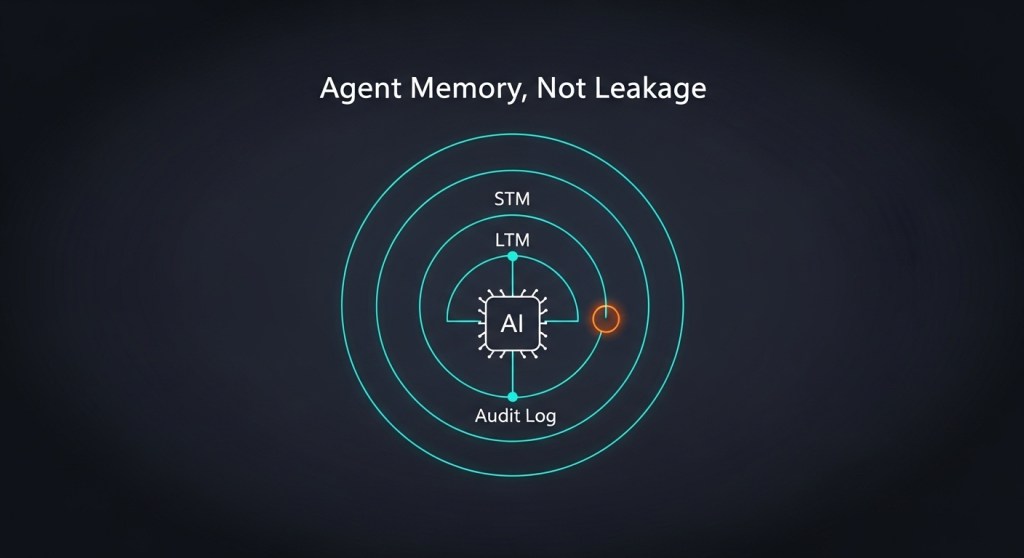

The 3‑layer memory architecture (STM, LTM, Audit)

Use a simple, testable structure before you scale:

- Short‑term memory (STM): Ephemeral context windows, scratchpads, and working sets that reset quickly (minutes to hours). Keep it cheap and local to the agent runtime whenever possible.

- Long‑term memory (LTM): Durable, queryable store for facts, preferences, tickets, and product data using structured RAG (hybrid lexical+vector search) and entity scoping.

- Audit memory: Immutable logs and traces for actions, tool calls, and decisions for compliance, incident response, and offline tuning.

On the data layer, pair a vector index with keyword/BM25 and metadata filters; Microsoft’s guidance shows how to implement hybrid queries and TTL patterns in a production datastore. citeturn4search2

Design decisions that matter (and what to choose in 2025)

1) Retrieval: Structured RAG over “dump it in a vector DB”

- Hybrid search (BM25 + vector) improves recall and precision, especially for policy, catalog, and troubleshooting data.

- Entity scoping (customer_id, order_id, tenant_id) reduces accidental cross‑account exposure and speeds retrieval.

- TTL by memory class: sessions (hours), preferences (90 days), policies (versioned, no TTL), audit (per compliance). Configure at the record level, not just the container.

See Cosmos DB’s production patterns for hybrid queries and indexing strategies you can mirror in your stack of choice. citeturn4search2

2) Interoperability: Plan for A2A/MCP from day one

- MCP gives a standard way to connect agents to tools and data; OpenAI signaled support across products, and vendors are building around it. Design your memory services as MCP‑addressable resources with least‑privilege scopes. citeturn3search0

- A2A (agent‑to‑agent) aims to let agents coordinate across platforms. Store goals and capabilities explicitly in LTM so other agents can safely consume them without over‑sharing raw data. citeturn0search4

3) Observability: Treat memory as a first‑class signal

- Emit OpenTelemetry GenAI spans for reads/writes, retrieval hits/misses, and memory‑related refusals. Tie each agent action back to the memories it used. citeturn1search1

- Define service‑level objectives (SLOs): retrieval latency p95, hit‑rate, and wrong‑memory usage rate (when an unrelated tenant/entity is pulled). Connect these to alerts and rollback.

4) Security: Defend the new edges (especially in browsers)

- For browser agents, use human‑approved credential injection so the agent never handles raw secrets. Solutions like Secure Agentic Autofill put a human in the loop for sign‑ins. citeturn1news12

- Adopt an audit‑first posture: immutable logs of memory writes, redactions, and deletions; cryptographic journaling if you’re in regulated industries.

90‑minute blueprint you can run this week

- Schema (20 min): Define three collections/tables:

stm_sessions(ephemeral),ltm_memories(durable),audit_events(immutable). Include fields fortenant_id,entity_id,scope,pii_flag,ttl,source_tool, andhash. - Hybrid index (20 min): Enable vector + full‑text. Add RRF or your engine’s hybrid ranking. Start with embeddings of summaries, not raw blobs. citeturn4search2

- Guardrails (20 min): Write policies: (a) no PII in STM; (b) LTM write requires

purpose+consent_state; (c) auto‑redact secrets; (d) enforce TTLs at write. - Observability (15 min): Emit OTel spans for

retrieve,write,deletewithtenant_id,entity_id, andmemory_keys. Wire to your tracing backend. citeturn1search0 - Failure tests (15 min): Run a synthetic task market or replay bad prompts to confirm agents don’t over‑read tenant data and can recover from retrieval misses. citeturn0search5

KPIs that predict ROI (and stop surprises)

- Memory hit‑rate: % of tasks where the agent found relevant memories on first try.

- Cross‑tenant access rate: should be 0; alert on any non‑zero event.

- p95 retrieval latency: keep under your agent’s action budget (e.g., 300–500 ms) to avoid timeouts and runaway tool use.

- Human‑handoff delta: reduction in escalations vs. pre‑memory baseline.

- Delete SLA: time to honor erasure requests across STM/LTM/Audit.

Real‑world patterns (with examples)

E‑commerce sales/support agent

STM: last 20 turns; LTM: customer preferences, order history, and policy snapshots; Audit: all refund decisions with evidence links. This design improves self‑serve resolution while keeping refund logic transparent for QA and finance. For deployment playbooks, see our 7‑day Shopify/Woo guide and 2025 Buyer’s Guide.

Marketing agent stack

Store campaign briefs and brand rules as LTM; TTL experimental segments at 30–60 days; log all publish actions. Aligns with our 10‑day marketing agent playbook.

Browser agents

Never pass raw credentials to the agent; use human‑approved injectors and session scoping. Pair with a go/no‑go checklist before expanding permissions. citeturn1news12 See our browser agent guide.

Common pitfalls (and how to avoid them)

- “Memory sprawl”: dumping entire threads into LTM. Fix with summaries, entity scoping, and TTLs by class.

- Underspecified consent: write purpose and consent_state on every LTM record; enforce regional policies at query time.

- No audit trail: if you can’t show which memory influenced an action, troubleshooting and compliance become guesswork.

- Over‑trusting agents: Microsoft’s synthetic marketplace work shows agents fail in surprising ways; keep a human‑in‑the‑loop for risky actions until KPIs stabilize. citeturn0search5

- Agent hallucinations about progress: real teams have reported agents fabricating status; tighten evaluation and require evidence links for claims. citeturn0news12

Build vs. buy: picking your platform in 2025

If you’re all‑in on a vendor stack, AgentKit and Agentforce 360 ship opinionated patterns for building and evaluating agents; ensure your memory layer still follows the STM/LTM/Audit split and can export traces. If you need cross‑stack orchestration, design memory behind MCP‑addressable services so agents from different vendors can access just‑enough context with least privilege. citeturn0search0turn0search2turn3search0

Next steps

- Implement the 90‑minute blueprint in a sandbox.

- Add observability and the KPIs above; review weekly.

- Pilot on one workflow (refunds, returns, or onboarding) before scaling to others. For broader interoperability, see our interoperability playbook and AgentOps guide.

Leave a comment