Quick plan (what you’ll get)

- Scan competitors for AI‑agent trends and standards.

- Clarify audience and the deployment problems we solve.

- Map content gaps vs. our recent governance/ROI guides.

- Choose a timely, high‑intent topic (agent observability).

- Do light SEO/SERP research and outline unique value.

- Deliver an actionable, standards‑based playbook with examples.

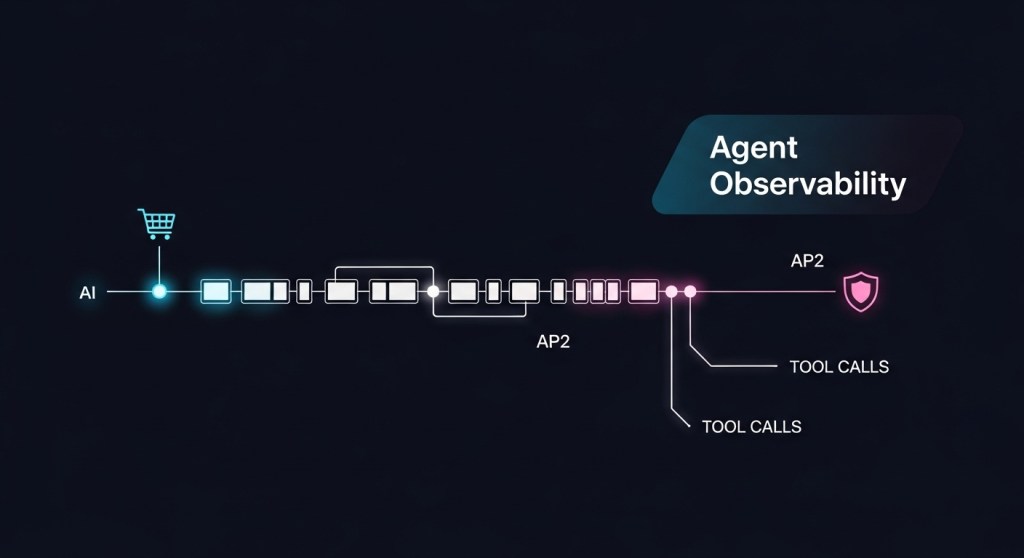

Agent Observability in 2025: A Practical Blueprint to Trace, Evaluate, and Govern MCP/AP2‑Enabled AI Agents

Who this is for: founders, e‑commerce operators, and engineering leaders rolling out AI agents for support, checkout, and back‑office workflows.

The problem: agents now browse, buy, and trigger workflows—but most teams still lack observability: consistent traces, KPIs, and incident response. Without it, you can’t prove ROI, pass audits, or fix failures quickly.

What’s changed lately: OpenTelemetry’s GenAI conventions are maturing; Microsoft’s Agent Framework ships first‑class OTEL hooks; and the industry is coalescing around Model Context Protocol (MCP) for tool access and Agent Payments Protocol (AP2) for agent‑led purchases. Together, these make production‑grade observability both possible and urgent.

Why observability is non‑negotiable for agents now

- Risk & security: recent warnings highlight impersonation and misuse risks, and researchers are publishing MCP‑specific attack benchmarks. Both are hard to mitigate without traces and approval records.

- Real threats: AI‑orchestrated campaigns show why you need forensics‑ready audit trails and anomaly detection.

- Commerce: as AP2 ushers in agent‑driven purchases, you’ll need consistent IDs and event traces to reconcile mandates, outcomes, refunds, and chargebacks.

The 7‑step Agent Observability Blueprint

1) Define outcomes, SLOs, and guardrails

Pick 5–8 KPIs that tie directly to business value and safety. For e‑commerce support and checkout agents:

- Success rate (task completion) and first‑attempt pass rate

- Mean time to resolution (MTTR) and loop rate (share of tasks stuck repeating the same reasoning or tool calls)

- Tool error rate and tool latency (P95)

- Cost per resolved task and revenue per agent hour

- AP2 events: mandate approvals, declines, disputes

Map each KPI to an alert or review policy (e.g., human approval for high‑risk actions, daily safety review report). Tie these to your 2025 Agent Governance Checklist.
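To make the KPI list concrete, here is a minimal C# sketch that computes several of these KPIs from per‑task records and flags SLO breaches. The record shape (one tool‑latency sample per task), the sample values, and the thresholds are assumptions for illustration, not recommended targets. P95 uses the nearest‑rank method.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical per-task records exported from traces. Field names, values, and the
// SLO thresholds below are illustrative assumptions, not recommended targets.
var tasks = new List<(bool Resolved, bool FirstAttempt, bool Looped, double ToolLatencyMs, double CostUsd)>
{
    (true,  true,  false, 180, 0.04),
    (true,  false, false, 420, 0.09),
    (false, false, true,  950, 0.15),
    (true,  true,  false, 210, 0.05),
};

// Nearest-rank percentile: the smallest sample with at least p% of samples at or below it.
static double Percentile(IEnumerable<double> values, double p)
{
    var sorted = values.OrderBy(v => v).ToArray();
    if (sorted.Length == 0) return 0;
    int rank = (int)Math.Ceiling(p / 100.0 * sorted.Length);
    return sorted[Math.Clamp(rank, 1, sorted.Length) - 1];
}

double successRate = tasks.Count(t => t.Resolved) / (double)tasks.Count;
double firstAttemptPass = tasks.Count(t => t.FirstAttempt) / (double)tasks.Count;
double loopRate = tasks.Count(t => t.Looped) / (double)tasks.Count;
double toolP95 = Percentile(tasks.Select(t => t.ToolLatencyMs), 95);
double costPerResolved = tasks.Sum(t => t.CostUsd) / tasks.Count(t => t.Resolved);

// Each KPI gets an explicit threshold; a breach pages on-call or lands in the daily review.
var slos = new (string Kpi, double Value, bool Breached)[]
{
    ("success_rate", successRate, successRate < 0.90),
    ("loop_rate", loopRate, loopRate > 0.05),
    ("tool_latency_p95_ms", toolP95, toolP95 > 800),
};

foreach (var s in slos.Where(b => b.Breached))
    Console.WriteLine($"SLO breach: {s.Kpi} = {s.Value:0.###}");
```

In practice you would compute these per time window from your trace backend; the point is that every KPI has a threshold and an owner action attached.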

2) Instrument agents and tools with OpenTelemetry (OTEL)

Use the OpenTelemetry GenAI semantic conventions to emit traces, metrics, and logs for prompts, responses, tool calls, and model metadata. Microsoft’s Agent Framework includes built‑in OTEL instrumentation, so this is quick to enable. Example (C#):

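```csharp
// Sketch on preview APIs (Microsoft.Agents.AI, Microsoft.Extensions.AI); names may change.
using System;
using Azure.AI.OpenAI;
using Azure.Identity;
using Microsoft.Agents.AI;
using Microsoft.Extensions.AI;
using OpenTelemetry;
using OpenTelemetry.Trace;

const string SourceName = "StorefrontAgents";
var endpoint = new Uri(Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT")!); // placeholder
var deployment = Environment.GetEnvironmentVariable("AZURE_OPENAI_DEPLOYMENT")!;       // placeholder

// Export spans from our source; swap the console exporter for OTLP in production.
using var tracerProvider = Sdk.CreateTracerProviderBuilder()
    .AddSource(SourceName).AddConsoleExporter().Build();

// Model-call spans; EnableSensitiveData = false keeps message content out of telemetry.
IChatClient chatClient = new AzureOpenAIClient(endpoint, new DefaultAzureCredential())
    .GetChatClient(deployment)
    .AsIChatClient()
    .AsBuilder()
    .UseOpenTelemetry(sourceName: SourceName, configure: c => c.EnableSensitiveData = false)
    .Build();

// Agent-level spans on the same source.
AIAgent agent = new ChatClientAgent(chatClient, name: "CheckoutAgent")
    .AsBuilder()
    .UseOpenTelemetry(sourceName: SourceName, configure: c => c.EnableSensitiveData = false)
    .Build();
```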

Keep sensitive data (raw prompt and response content) off in production; enable it only in test environments or during incident response.

3) Normalize tool usage via MCP

Wrap internal APIs as MCP servers and record each invocation as a span with attributes such as mcp.tool.name, tool.version, request.hash, response.hash, latency_ms, and approval.required. Because every tool call goes through the same protocol, one span schema works across MCP‑capable platforms (Claude, ChatGPT, Gemini, etc.), which keeps your telemetry portable.
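A minimal sketch of such a span, assuming .NET’s built‑in System.Diagnostics.ActivitySource (the API the OpenTelemetry .NET SDK builds on). InvokeTool, the stubbed tool delegate, and the version string are hypothetical, and the attribute keys are this guide’s naming rather than an official OTEL convention. Payloads are hashed with SHA‑256 so spans can be correlated without storing raw customer data.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Security.Cryptography;
using System.Text;

var source = new ActivitySource("StorefrontAgents");
var finished = new List<Activity>();

// In production the OpenTelemetry SDK subscribes to the source; here a bare listener
// collects finished spans in memory so the sketch runs without extra packages.
using var listener = new ActivityListener
{
    ShouldListenTo = s => s.Name == "StorefrontAgents",
    Sample = (ref ActivityCreationOptions<ActivityContext> options) => ActivitySamplingResult.AllDataAndRecorded,
    ActivityStopped = finished.Add,
};
ActivitySource.AddActivityListener(listener);

// Hash payloads so spans can be correlated without storing raw customer data.
static string Sha256Hex(string text) =>
    Convert.ToHexString(SHA256.HashData(Encoding.UTF8.GetBytes(text))).ToLowerInvariant();

// Hypothetical wrapper: one span per MCP tool invocation, using this guide's attribute names.
string InvokeTool(string toolName, string version, string request, bool approvalRequired,
                  Func<string, string> call)
{
    using var span = source.StartActivity($"mcp.tool {toolName}", ActivityKind.Client);
    span?.SetTag("mcp.tool.name", toolName);
    span?.SetTag("tool.version", version);
    span?.SetTag("request.hash", Sha256Hex(request));
    span?.SetTag("approval.required", approvalRequired);
    var timer = Stopwatch.StartNew();
    try
    {
        string response = call(request);
        span?.SetTag("response.hash", Sha256Hex(response));
        return response;
    }
    catch (Exception ex)
    {
        span?.SetStatus(ActivityStatusCode.Error, ex.Message); // feeds the tool error rate KPI
        throw;
    }
    finally
    {
        span?.SetTag("latency_ms", timer.ElapsedMilliseconds);
    }
}

// Stubbed tool: a real MCP server would handle this via a tools/call request.
string result = InvokeTool("order.lookup", "1.2.0", "{\"order_id\":\"A-1001\"}", false,
    req => "{\"status\":\"lost_in_transit\"}");
```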

4) Capture commerce trails with AP2

When agents transact, attach AP2 IDs to your spans: ap2.mandate_id, ap2.payment_id, ap2.status, ap2.provider. Store these alongside the agent’s plan, tools, and approvals so finance, risk, and support can reconcile outcomes quickly.
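Once AP2 IDs are on your spans, reconciliation becomes a query. The sketch below assumes span attributes have already been exported as key/value maps; the IDs, status strings, and provider name are invented, and the ap2.* keys follow this guide rather than the AP2 specification. It flags approved mandates that never produced a payment ID.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Each dictionary stands in for one span's AP2 attributes as exported from a trace store.
// IDs, status values, and the provider name are made up for illustration.
var spans = new List<Dictionary<string, string>>
{
    new Dictionary<string, string> { ["ap2.mandate_id"] = "MDT-123", ["ap2.status"] = "approved",
                                     ["ap2.payment_id"] = "PAY-9", ["ap2.provider"] = "example-psp" },
    new Dictionary<string, string> { ["ap2.mandate_id"] = "MDT-124", ["ap2.status"] = "approved" },
    new Dictionary<string, string> { ["ap2.mandate_id"] = "MDT-125", ["ap2.status"] = "declined" },
};

// Approved mandates with no linked payment ID need a finance follow-up.
var unpaid = spans
    .GroupBy(s => s["ap2.mandate_id"])
    .Where(g => g.Any(s => s.GetValueOrDefault("ap2.status") == "approved")
             && !g.Any(s => s.ContainsKey("ap2.payment_id")))
    .Select(g => g.Key)
    .ToList();

Console.WriteLine($"Approved mandates without a payment: {string.Join(", ", unpaid)}");
```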

5) Add evals on traces—not just prompts

Move beyond single‑prompt tests. Run nightly evals that grade whole traces: the action sequence, tool‑call correctness, and whether safety gates fired. Draw on emerging resources (e.g., OpenAI’s recent work on evals and community demos) and research such as MSB for MCP‑specific threats.
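A trace‑level grader can start very small. This sketch assumes a trace has been flattened to the ordered list of span names for one task. The span names, the privileged‑tool set, and the two pass criteria (required steps appear in order, and each privileged call is preceded by its own approval span) are illustrative assumptions.

```csharp
using System;
using System.Collections.Generic;

// A trace here is the ordered list of span names from one task. Span names, the privileged
// tool set, and the pass criteria are assumptions for illustration.
var privileged = new HashSet<string> { "payments.refund" };

// True if every required step appears in the trace in order (other steps may be interleaved).
static bool InOrder(IReadOnlyList<string> trace, IReadOnlyList<string> required)
{
    int next = 0;
    foreach (var step in trace)
        if (next < required.Count && step == required[next]) next++;
    return next == required.Count;
}

// True if each privileged call is preceded by its own approval span (approvals are not reused).
bool ApprovalsOk(IReadOnlyList<string> trace)
{
    bool pendingApproval = false;
    foreach (var step in trace)
    {
        if (step == "approval.granted") pendingApproval = true;
        else if (privileged.Contains(step))
        {
            if (!pendingApproval) return false;
            pendingApproval = false;
        }
    }
    return true;
}

(bool Pass, string Reason) Grade(IReadOnlyList<string> trace, IReadOnlyList<string> required)
{
    if (!InOrder(trace, required)) return (false, "wrong action sequence");
    if (!ApprovalsOk(trace)) return (false, "privileged tool without approval");
    return (true, "ok");
}

var refundPlan = new[] { "order.lookup", "payments.refund", "email.send" };
var goodTrace = new[] { "plan", "order.lookup", "policy.check", "approval.granted", "payments.refund", "email.send" };
var badTrace = new[] { "plan", "order.lookup", "payments.refund", "email.send" };

Console.WriteLine(Grade(goodTrace, refundPlan)); // (True, ok)
Console.WriteLine(Grade(badTrace, refundPlan));  // (False, privileged tool without approval)
```

Running graders like this nightly over sampled production traces, and treating a regression on a previously passing trace as a failed build, is one way to turn traces into a test suite.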

6) Dashboards and alerts your execs will use

- Ops: task success rate, loop rate, tool errors by endpoint, P95 latency

- Finance: cost per task, AP2 approvals/declines, refund rate

- Risk: impersonation flags, prompt‑injection detections, privileged‑tool usage

Alert when loop rate spikes or when a privileged tool is used without an approval span.
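The loop‑rate alert can be expressed as two small predicates. This sketch assumes each task’s tool calls are available as (tool, request hash) pairs; the loop definition (three identical consecutive calls), the 2× baseline multiplier, and the 2% floor are hypothetical defaults to tune, not standards.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// A task "loops" when the same tool is called with the same request hash `maxRepeats`
// times in a row. The threshold is an assumption; tune it per agent.
static bool IsLooping(IReadOnlyList<(string Tool, string RequestHash)> calls, int maxRepeats = 3)
{
    int run = 1;
    for (int i = 1; i < calls.Count; i++)
    {
        run = calls[i] == calls[i - 1] ? run + 1 : 1;
        if (run >= maxRepeats) return true;
    }
    return false;
}

// Spike rule: the current loop rate is above a floor and well above the trailing baseline.
// The 2x multiplier and 2% floor are illustrative defaults.
static bool LoopRateSpike(double current, double baseline, double multiplier = 2.0, double floor = 0.02) =>
    current >= floor && current > baseline * multiplier;

// One alert window: each entry is the tool-call sequence of one task (sample data).
var window = new List<(string Tool, string RequestHash)[]>
{
    new[] { ("order.lookup", "h1"), ("carrier.track", "h2") },
    new[] { ("carrier.track", "h3"), ("carrier.track", "h3"), ("carrier.track", "h3") },
    new[] { ("order.lookup", "h4") },
    new[] { ("order.lookup", "h5"), ("order.lookup", "h6") },
};

double loopRate = window.Count(calls => IsLooping(calls)) / (double)window.Count;
if (LoopRateSpike(loopRate, baseline: 0.05))
    Console.WriteLine($"ALERT: loop rate {loopRate:P0} vs. baseline 5%");
```

Keying on the request hash rather than the tool name alone avoids flagging legitimate retries with different arguments.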

7) Incident response for agents (90‑minute drill)

- Freeze the agent version; route risky tasks to human fallback.

- Collect the full trace (prompts, tools, AP2 events) and session replay.

- Classify the failure with a taxonomy (environment vs. agent) to choose the right fix path.

- Patch prompts, permissions, or tool contracts; add a regression test.

- Approve & ship via your governance controls.
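The classification and fallback steps work best when the first pass is automatic. Below is a hypothetical triage function plus a routing rule for the freeze period; the span kinds, error codes, and the environment/agent mapping are assumptions to adapt to whatever taxonomy you adopt, and anything unrecognized falls through to a human.

```csharp
using System;

// Hypothetical first-pass triage: map the failing span to "environment" (fix infra or the tool)
// or "agent" (fix prompts, permissions, or tool contracts). Kinds and error codes are assumptions.
static string Classify(string spanKind, string error) => (spanKind, error) switch
{
    ("tool", "timeout") or ("tool", "http_5xx") or ("tool", "rate_limited") => "environment",
    ("tool", "invalid_arguments") => "agent",   // the agent called the tool incorrectly
    ("plan", "loop_detected") => "agent",
    ("policy", "approval_missing") => "agent",
    _ => "unclassified",                        // route to a human reviewer
};

// While an agent version is frozen, anything above low risk goes to a human.
static bool RouteToHuman(bool agentFrozen, string riskTier) => agentFrozen && riskTier != "low";

Console.WriteLine(Classify("tool", "timeout"));
Console.WriteLine(RouteToHuman(agentFrozen: true, riskTier: "medium"));
```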

Example: tracing an agentic refund

Scenario: a support agent handles “Where’s my order?” and issues a refund if shipment is lost.

- Plan span: intent = refund‑eligibility; risk_tier = medium.

- Tool span (MCP): order.lookup → success (220 ms).

- Policy span: auto‑approve refunds under $50; otherwise escalate.

- AP2 span: mandate_id=MDT‑123; status=approved.

- Outcome span: refund_issued=true; email_sent=true.

From this single trace, CX can audit the decision, Finance can reconcile payouts, and Security can verify approvals.
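Here is a sketch of how that refund trace could be emitted, again assuming .NET’s System.Diagnostics.ActivitySource with an in‑memory listener standing in for the OpenTelemetry SDK. HandleRefund, the span names, the attribute keys, the stubbed lookup result, and the hard‑coded mandate ID are illustrative. Because each child span starts while the plan span is current, all five spans share one trace ID, which is what lets CX, Finance, and Security look at the same record.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

var source = new ActivitySource("StorefrontAgents");
var spans = new List<Activity>();
using var listener = new ActivityListener
{
    ShouldListenTo = s => s.Name == "StorefrontAgents",
    Sample = (ref ActivityCreationOptions<ActivityContext> options) => ActivitySamplingResult.AllDataAndRecorded,
    ActivityStopped = spans.Add,
};
ActivitySource.AddActivityListener(listener);

// Hypothetical handler: the plan span is the parent; every step below is a child span.
bool HandleRefund(string orderId, decimal amount)
{
    using var plan = source.StartActivity("agent.plan");
    plan?.SetTag("intent", "refund-eligibility");
    plan?.SetTag("risk_tier", "medium");

    using (var tool = source.StartActivity("mcp.tool order.lookup", ActivityKind.Client))
    {
        tool?.SetTag("mcp.tool.name", "order.lookup");
        tool?.SetTag("order.id", orderId);
        tool?.SetTag("order.status", "lost"); // stubbed lookup result
    }

    bool autoApprove = amount < 50m; // policy: auto-approve under $50
    using (var policy = source.StartActivity("policy.refund"))
        policy?.SetTag("policy.auto_approve", autoApprove);
    if (!autoApprove) return false; // escalate to a human; no AP2 span is emitted

    using (var ap2 = source.StartActivity("ap2.mandate"))
    {
        ap2?.SetTag("ap2.mandate_id", "MDT-123");
        ap2?.SetTag("ap2.status", "approved");
    }
    using (var outcome = source.StartActivity("agent.outcome"))
    {
        outcome?.SetTag("refund_issued", true);
        outcome?.SetTag("email_sent", true);
    }
    return true;
}

bool issued = HandleRefund("A-1001", 32.50m);
```

For a refund of $50 or more, the handler returns after the policy span without AP2 or outcome spans, which is what the escalation path should look like in your traces.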

Security notes you should not skip

- Impersonation and over‑permissioning remain top risks. Treat agent identities and permissions like code (reviewed and versioned), and log every approval as a span.

- MCP risks are being actively studied (maintainability, tool poisoning, name collisions, and injection). Build detections and allow‑lists into your tool registry.

- Threat reality: AI is showing up in offensive campaigns; design for forensics from day one.
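For the tool‑registry checks, a minimal sketch: detect tool names exposed by more than one server and block anything not on an allow‑list. The server names, the "server/tool" allow‑list format, and the sample discovery results are hypothetical; with MCP, the discovered list would come from each server’s tools/list response.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical registry data: tools discovered per server, plus an allow-list of
// fully qualified "server/tool" names approved by security review.
var allowList = new HashSet<string> { "orders-mcp/order.lookup", "payments-mcp/payments.refund" };
var discovered = new List<(string Server, string Tool)>
{
    ("orders-mcp", "order.lookup"),
    ("payments-mcp", "payments.refund"),
    ("community-mcp", "payments.refund"), // same tool name from a second server
};

// Name collisions: one tool name exposed by more than one server (a shadowing risk).
var collisions = discovered
    .GroupBy(t => t.Tool)
    .Where(g => g.Select(t => t.Server).Distinct().Count() > 1)
    .Select(g => g.Key)
    .ToList();

// Allow-list: anything not explicitly approved is blocked from registration.
var blocked = discovered.Where(t => !allowList.Contains($"{t.Server}/{t.Tool}")).ToList();

Console.WriteLine($"Colliding names: {string.Join(", ", collisions)}");
Console.WriteLine($"Blocked tools: {string.Join(", ", blocked)}");
```

Qualifying names by server is what makes the allow‑list robust to collisions: an unapproved server cannot register a tool just by reusing a trusted tool’s name.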

14‑day rollout plan (works for most teams)

- Days 1–2: Pick KPIs and wireframe dashboards; decide sensitive‑data policy.

- Days 3–5: Enable OTEL on one agent and two MCP tools; ship basic spans.

- Days 6–8: Add AP2 span attributes in a sandbox; validate mandate → payment links.

- Days 9–11: Stand up trace‑based evals; add loop‑rate and privileged‑tool alerts.

- Days 12–14: Run a live pilot; review incidents via our governance checklist; green‑light next agent.

How this fits with your existing stack

- Agent platforms: Whether you’re on OpenAI AgentKit, Salesforce Agentforce 360, Amazon Nova Act, or Google Mariner, you can still emit OTEL spans and normalize tools via MCP for cross‑vendor visibility.

- System of Record: Pipe traces and approvals into your Agent System of Record for compliance and lifecycle management.

- Holiday readiness: Pair this blueprint with our Agentic Checkout in Holiday 2025 plan to track conversion, approvals, and failures end‑to‑end.

What competitors cover—and what they miss

News cycles (funding, launches) rarely explain how to operationalize evals, AP2 audit trails, and MCP span design. This guide bridges that gap so you can ship agents that are fast, safe, and measurable.

Further reading

- OpenTelemetry’s semantic conventions for AI agents.

- Microsoft Agent Framework observability docs (C# and Python).

- Anthropic’s MCP introduction and The Verge’s coverage.

- Google’s AP2 announcement for agent‑driven purchases.

- MCP security/maintainability studies and benchmarks.

Call to action

Want a done‑for‑you rollout? HireNinja can instrument your agents with OTEL, wire up MCP/AP2 audit trails, and stand up dashboards in two weeks. Subscribe for more playbooks or talk to our team to start a pilot.
