Quick plan for this guide

- Scan competitors’ coverage to confirm what’s new in agent platforms and management layers.

- Define buyer intent (founders, e‑commerce operators, and tech leads evaluating platforms for 2026).

- Map must‑have requirements: interoperability (MCP/A2A), observability (OpenTelemetry), governance, and FinOps.

- Build a scored RFP checklist you can copy into procurement docs.

- Link to deeper how‑to posts for implementation: registry, CI/CD, firewall, reliability, and spend.

Why this RFP now

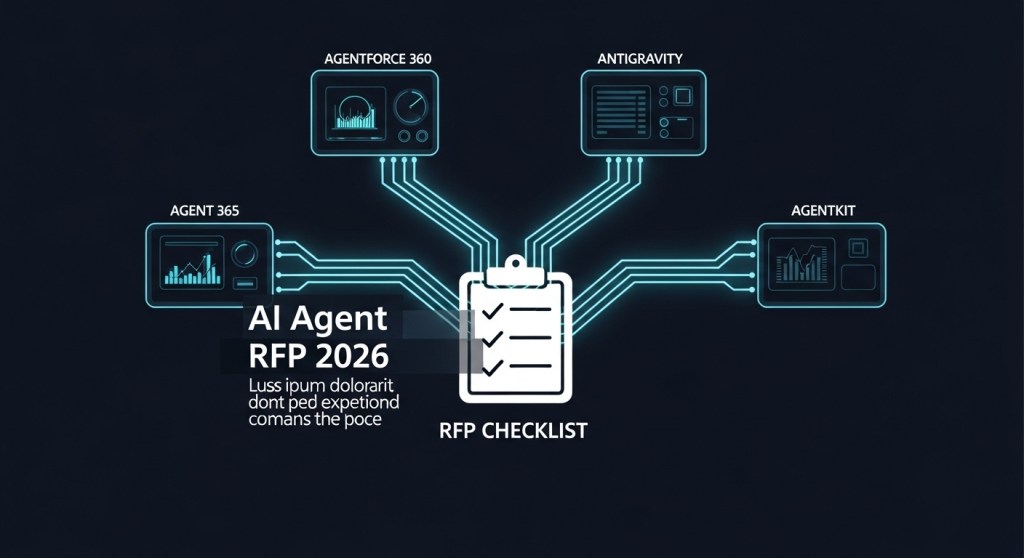

In the 72 hours before this guide was written, Microsoft announced Agent 365, a control surface for managing a growing bot workforce (now in early access), and Google introduced Antigravity, an agent‑first coding and orchestration environment, alongside Gemini 3. OpenAI had already launched AgentKit, its toolkit for building and shipping agents, at DevDay. And earlier this year, Microsoft adopted Google’s A2A interoperability standard so agents can collaborate across clouds.

Regulatory timing also matters. Official EU pages still show broad applicability dates in August 2026 with staged exceptions, while a November 19, 2025 update signaled delays for some high‑risk provisions to late 2027. Build your plan assuming staggered obligations by system type and geography.

How to use this checklist

This RFP framework helps you compare Microsoft Agent 365, Salesforce Agentforce 360, Google Antigravity (Gemini 3), OpenAI AgentKit—and any other agent platform—on the capabilities that actually lower risk and drive ROI.

Category A — Interoperability and ecosystem

- MCP support: Does the platform support the Model Context Protocol (clients, servers, registry), or provide adapters?

- A2A: Can agents federate tasks with external agents via A2A or equivalent?

- Connectors: Native connectors to CRM, commerce, support, data warehouses, search, and calendars?

- Bring‑your‑own‑model: Choice of models (Gemini, Claude, OpenAI, locally hosted NVIDIA NIMs) without lock‑in?

- Marketplace/registry: Is there a trusted agent/connector registry with signed metadata?
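To make “signed metadata” concrete during vendor demos, here is a minimal sketch of what verification means: the registry signs a canonical serialization of each entry, and a consumer refuses any entry whose signature no longer matches. It assumes a shared HMAC‑SHA256 key purely for brevity; the key, the entry fields, and the function names are hypothetical, and a production registry would more likely use asymmetric signatures so consumers never hold the signing key.

```python
import hashlib
import hmac
import json

# Hypothetical demo key. A real registry would more likely use asymmetric
# signatures (e.g. Ed25519) so consumers can verify without the signing key.
REGISTRY_KEY = b"demo-registry-key"


def canonical(entry: dict) -> bytes:
    """Serialize metadata deterministically so signer and verifier hash the same bytes."""
    return json.dumps(entry, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_entry(entry: dict, key: bytes = REGISTRY_KEY) -> str:
    """Return a hex HMAC-SHA256 tag over the canonical metadata."""
    return hmac.new(key, canonical(entry), hashlib.sha256).hexdigest()


def verify_entry(entry: dict, signature: str, key: bytes = REGISTRY_KEY) -> bool:
    """Recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign_entry(entry, key), signature)


# Hypothetical connector entry; the field names are illustrative, not a registry schema.
entry = {
    "name": "crm-connector",
    "version": "1.4.0",
    "protocols": ["mcp"],
    "tools": ["lookup_customer", "create_ticket"],
}
signature = sign_entry(entry)
print(verify_entry(entry, signature))  # True

# Quietly adding a tool after signing invalidates the signature.
tampered = dict(entry, tools=entry["tools"] + ["export_all_contacts"])
print(verify_entry(tampered, signature))  # False
```

The useful RFP question is less “do you sign?” than “what exactly is covered by the signature?”: if the tool list is outside the signed payload, a connector can gain capabilities without tripping verification.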

Category B — Observability and reliability

- OpenTelemetry: First‑class traces, spans, and logs for agent steps, ideally aligned with the emerging semantic conventions for agents.

- Evals: Built‑in task evals, step grading, and regression gates (a particular focus of AgentKit).

- SLOs: Error budgets and SLO dashboards for task success, latency, and hallucination rate.

- Shadow/canary: Support for shadow trials and canary releases for agent flows.
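Error budgets and canary releases fit together: the SLO defines how many failed tasks you can afford, and a canary is promoted only if it stays within that allowance without regressing against the current version. The sketch below shows that arithmetic under stated assumptions; the 0.5‑percentage‑point regression tolerance, the class and function names, and the sample counts are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SloWindow:
    """Task outcomes for one agent flow over an SLO window (e.g. 7 days)."""
    total_tasks: int
    failed_tasks: int

    @property
    def failure_rate(self) -> float:
        return self.failed_tasks / self.total_tasks if self.total_tasks else 0.0


def error_budget_remaining(window: SloWindow, slo_target: float) -> float:
    """Fraction of the error budget left; negative means the budget is overspent.

    A 99% task-success SLO over 1,000 tasks allows 10 failures, so
    5 failures leave half the budget.
    """
    allowed_failures = (1.0 - slo_target) * window.total_tasks
    if allowed_failures <= 0:
        return 0.0 if window.failed_tasks == 0 else float("-inf")
    return 1.0 - window.failed_tasks / allowed_failures


def promote_canary(baseline: SloWindow, canary: SloWindow,
                   slo_target: float, max_regression: float = 0.005) -> bool:
    """Promote only if the canary meets the SLO and is not materially worse than baseline."""
    if baseline.total_tasks == 0 or canary.total_tasks == 0:
        return False  # not enough evidence yet; keep the canary in place
    meets_slo = canary.failure_rate <= 1.0 - slo_target
    regression = canary.failure_rate - baseline.failure_rate
    return meets_slo and regression <= max_regression


week = SloWindow(total_tasks=1000, failed_tasks=5)
print(round(error_budget_remaining(week, slo_target=0.99), 3))  # 0.5

baseline = SloWindow(total_tasks=1000, failed_tasks=6)
print(promote_canary(baseline, SloWindow(200, 1), slo_target=0.99))  # True
print(promote_canary(baseline, SloWindow(200, 4), slo_target=0.99))  # False
```

When scoring a platform, check whether it can compute these numbers for you from its own traces, or whether you would have to export raw task outcomes and rebuild this logic yourself.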

Category C — Security and governance

- Policy engine: OPA or equivalent for tool permissions, data boundaries, and approvals.

- Identity: Agent identities, least‑privilege access (e.g., Entra, SCIM), and secrets management.

- Auditability: Tamper‑evident logs of tool calls with inputs/outputs and human approvals.

- Standards: ISO/IEC 42001 alignment and AI impact assessments (ISO/IEC 42005).

- Regulatory mapping: EU AI Act class mapping; plan for phased obligations in 2025–2027.
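Two of the items above, a policy engine and tamper‑evident audit logs, are easy to probe in a pilot if you know what “good” looks like. This sketch uses a plain dictionary as a stand‑in for OPA (it is not OPA or Rego), applies default‑deny plus a human‑approval rule, and records every decision in a SHA‑256 hash chain so that editing any past record breaks verification. The policy table, agent and tool names, and helper functions are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical policy table standing in for an OPA-style engine:
# which tools each agent identity may call, and which need a human approval.
POLICY = {
    "support-agent": {
        "allowed": {"lookup_order", "issue_refund"},
        "needs_approval": {"issue_refund"},
    },
}

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def authorize(agent_id: str, tool: str, approved_by: Optional[str] = None) -> bool:
    """Default-deny check: unknown agents and unlisted tools are refused."""
    rules = POLICY.get(agent_id)
    if rules is None or tool not in rules["allowed"]:
        return False
    if tool in rules["needs_approval"] and approved_by is None:
        return False
    return True


def _chain_hash(prev_hash: str, event: dict) -> str:
    body = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only log; each record's hash covers the previous hash, so edits break the chain."""
    records: list = field(default_factory=list)

    def append(self, event: dict) -> None:
        prev_hash = self.records[-1]["hash"] if self.records else GENESIS
        self.records.append({"event": event, "prev": prev_hash,
                             "hash": _chain_hash(prev_hash, event)})

    def verify(self) -> bool:
        prev_hash = GENESIS
        for rec in self.records:
            if rec["prev"] != prev_hash or rec["hash"] != _chain_hash(prev_hash, rec["event"]):
                return False
            prev_hash = rec["hash"]
        return True


log = AuditLog()
for tool, approver in [("lookup_order", None), ("issue_refund", None), ("issue_refund", "ops-lead")]:
    allowed = authorize("support-agent", tool, approver)
    log.append({"agent": "support-agent", "tool": tool,
                "approved_by": approver, "allowed": allowed})

print([r["event"]["allowed"] for r in log.records])  # [True, False, True]
print(log.verify())  # True
log.records[1]["event"]["allowed"] = True  # rewrite history
print(log.verify())  # False
```

A hash chain only detects edits if the latest hash is also stored somewhere the operator cannot rewrite; ask vendors where that anchor lives.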

Category D — Cost control (FinOps)

- Per‑job cost: Trace‑level cost/tokens by step and by tool.

- Budget guardrails: Limits by user, team, environment; kill switches.

- Dynamic routing: Route to cheaper/faster models based on SLOs.
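The FinOps items reduce to two small rules: pick the cheapest model that still meets your quality and latency SLOs, and refuse any step that would push spend past its cap. The sketch below illustrates both; the model names, prices, latencies, and quality scores are placeholders rather than vendor figures, and `route` and `Budget` are hypothetical helpers.

```python
from dataclasses import dataclass


# Placeholder catalog: prices, latencies, and quality scores are NOT vendor quotes.
@dataclass(frozen=True)
class ModelOption:
    name: str
    usd_per_1k_tokens: float
    p95_latency_ms: int
    quality_score: float  # e.g. pass rate on your own eval suite, 0..1


MODELS = [
    ModelOption("small-fast", 0.0005, 400, 0.82),
    ModelOption("mid", 0.003, 900, 0.90),
    ModelOption("large", 0.015, 2500, 0.95),
]


def route(min_quality: float, max_latency_ms: int) -> ModelOption:
    """Pick the cheapest model that satisfies both the quality and latency SLOs."""
    eligible = [m for m in MODELS
                if m.quality_score >= min_quality and m.p95_latency_ms <= max_latency_ms]
    if not eligible:
        raise LookupError("no model meets the SLOs; escalate or relax targets")
    return min(eligible, key=lambda m: m.usd_per_1k_tokens)


class Budget:
    """Per-team spend guardrail that trips a kill switch instead of overspending."""

    def __init__(self, cap_usd: float):
        self.cap_usd = cap_usd
        self.spent_usd = 0.0
        self.killed = False

    def charge(self, model: ModelOption, tokens: int) -> float:
        if self.killed:
            raise RuntimeError("budget kill switch engaged")
        cost = model.usd_per_1k_tokens * tokens / 1000
        if self.spent_usd + cost > self.cap_usd:
            self.killed = True
            raise RuntimeError("step would exceed budget; kill switch engaged")
        self.spent_usd += cost
        return cost


m = route(min_quality=0.85, max_latency_ms=1000)
print(m.name)  # mid
budget = Budget(cap_usd=0.05)
budget.charge(m, 10_000)  # first step fits under the cap
try:
    budget.charge(m, 10_000)  # second step would push spend past the cap
except RuntimeError as err:
    print(err)  # step would exceed budget; kill switch engaged
print(budget.killed)  # True
```

Checking the cap before the call (rather than after) is the design choice to look for: a platform that only reports overspend after the fact offers visibility, not a guardrail.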

Category E — Productivity fit

- Agent management: Central admin for agent registry, policies, and health (the focus of Agent 365).

- Embedded flows: Work where teams live (Slack, Gmail, Docs, Sheets, CRM).

- Voice/telephony: Native voice agents and call analytics when needed.

60‑point RFP checklist (copy/paste)

Score each item 0–2 (0 = missing, 1 = partial, 2 = strong). Ten categories of six items give 60 items and a maximum unweighted score of 120. Weight categories to match your use case.

| Category | Items (6 each) |
|---|---|
| Interoperability | MCP client/server; A2A federation; BYO‑model; secure registry; zero‑copy data; SDK coverage |
| Observability | OTel spans; prompt/step logs; evals; error budgets; replay harness; redaction |
| Security | OPA policies; agent identity; scoped secrets; tool sandbox; jailbreak defenses; SBOM/ABOM |
| Governance | ISO 42001; ISO 42005 AIIA; DPIA hooks; human approvals; model cards; risk register |
| Compliance | EU AI Act mapping; data residency; access logs; retention; consent; vendor DPAs |
| FinOps | Per‑step cost; budgets; model routing; cache; usage caps; monthly reports |
| Reliability | Shadow; canary; rollback; deterministic tools; retry/backoff; chaos tests |
| Productivity | Workspace add‑ins; Slack/Gmail; ticketing; calendars; file systems; mobile |
| E‑commerce | Shopify/WooCommerce apps; catalog sync; OMS hooks; returns; PDP copy; promos |
| Roadmap/vendor | Public roadmap; SLA; pricing transparency; support tiers; references; exit plan |
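If you keep scores in a spreadsheet export or a script, the checklist arithmetic is worth pinning down so every evaluator computes totals the same way. This is a minimal sketch assuming one list of six 0–2 scores per category; the function names and the example weights are hypothetical, and the weighted figure is expressed as a percentage of the weighted maximum.

```python
from typing import Dict, List, Optional

# Category names follow the checklist table; weights are yours to choose.
CATEGORIES = [
    "Interoperability", "Observability", "Security", "Governance", "Compliance",
    "FinOps", "Reliability", "Productivity", "E-commerce", "Roadmap/vendor",
]
ITEMS_PER_CATEGORY = 6
MAX_ITEM_SCORE = 2  # 0 = missing, 1 = partial, 2 = strong
MAX_CATEGORY_SCORE = ITEMS_PER_CATEGORY * MAX_ITEM_SCORE  # 12


def category_score(item_scores: List[int]) -> int:
    """Sum one category's six 0-2 item scores (0..12)."""
    if len(item_scores) != ITEMS_PER_CATEGORY:
        raise ValueError(f"expected {ITEMS_PER_CATEGORY} item scores")
    if any(s not in (0, 1, 2) for s in item_scores):
        raise ValueError("item scores must be 0, 1, or 2")
    return sum(item_scores)


def raw_total(scores: Dict[str, List[int]]) -> int:
    """Unweighted total out of 120 (10 categories x 12)."""
    return sum(category_score(scores[c]) for c in CATEGORIES)


def weighted_percent(scores: Dict[str, List[int]],
                     weights: Optional[Dict[str, float]] = None) -> float:
    """Weighted share of the maximum, 0-100; unlisted categories default to weight 1."""
    weights = weights or {}
    total_weight = sum(weights.get(c, 1.0) for c in CATEGORIES)
    achieved = sum(weights.get(c, 1.0) * category_score(scores[c]) / MAX_CATEGORY_SCORE
                   for c in CATEGORIES)
    return 100.0 * achieved / total_weight


# Hypothetical platform: "partial" everywhere except a stronger interoperability story.
scores = {c: [1] * 6 for c in CATEGORIES}
scores["Interoperability"] = [2, 2, 2, 1, 1, 0]
print(raw_total(scores))  # 62
print(round(weighted_percent(scores, {"Interoperability": 3, "E-commerce": 2}), 1))  # 53.8
```

Normalizing each category to its own maximum before weighting keeps the weights meaningful even if you later add or drop items in one category.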

Sample scoring matrix

| Platform | Interoperability | Observability | Security | FinOps | Total (out of 120) |
|---|---|---|---|---|---|
| Agent 365 | __ / 12 | __ / 12 | __ / 12 | __ / 12 | __ / 120 |
| Agentforce 360 | __ / 12 | __ / 12 | __ / 12 | __ / 12 | __ / 120 |
| Antigravity (Gemini 3) | __ / 12 | __ / 12 | __ / 12 | __ / 12 | __ / 120 |
| OpenAI AgentKit | __ / 12 | __ / 12 | __ / 12 | __ / 12 | __ / 120 |

The four category columns are a sample; the Total sums all ten checklist categories (10 × 12 = 120).

Tip: Keep raw notes for each score with links to docs, security questionnaires, and pilot results.

Red flags to watch

- Closed integrations only: No MCP/A2A path or vendor‑neutral adapters.

- Opaque pricing: No per‑step cost view or budget guardrails.

- Weak observability: No OpenTelemetry spans for tool calls, and no controls over whether chain‑of‑thought content is disclosed in traces.

- Compliance shrug: No clear ISO 42001 posture or EU AI Act mapping by system type/timeline.

Go deeper: implement the foundations

- Build an Agent Registry for MCP/A2A and Agent 365 — identity, policy, secrets.

- Ship Agent CI/CD in 7 Days — shadow, canary, kill switches.

- Ship an Agent Firewall — prompt‑injection defenses and approvals.

- Agent Reliability Lab — evals, tracing, SLOs.

- Cut Agent Spend by 20–40% — routing, prompt diet, KPIs.

- Agent Control Plane for 2026 — unify platforms with MCP + OTel.

What we’re seeing in the market

Agent management is becoming its own category (Agent 365), Salesforce is positioning for end‑to‑end orchestration (Agentforce 360), and Google’s developer‑first stack (Antigravity + Gemini 3) emphasizes agentic development workflows and artifacts. OpenAI’s AgentKit pushes build‑and‑eval velocity.

Next steps

- Run a 2‑week pilot with two platforms using the scoring matrix.

- Instrument pilots with OpenTelemetry to track task success and cost per outcome.

- Review governance with ISO 42001/42005 and map EU AI Act class and timing.

- Decide on a control plane pattern (central registry + policies) before scaling.
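For the pilot step that tracks task success and cost per outcome, the key detail is that failed tasks still cost money, so cost per outcome should divide total spend by successful tasks only. This sketch assumes you have already rolled your OpenTelemetry spans up into one record per task; the `TaskTrace` fields and platform labels are hypothetical, not an OpenTelemetry schema.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class TaskTrace:
    """One agent task rolled up from its spans (hypothetical fields, not an OTel schema)."""
    platform: str
    succeeded: bool
    cost_usd: float


def pilot_summary(traces: Iterable[TaskTrace], platform: str) -> dict:
    """Task success rate and cost per *successful* task for one platform.

    Failed tasks still cost money, so their spend stays in the numerator.
    """
    runs = [t for t in traces if t.platform == platform]
    successes = sum(1 for t in runs if t.succeeded)
    spend = sum(t.cost_usd for t in runs)
    return {
        "tasks": len(runs),
        "success_rate": successes / len(runs) if runs else None,
        "cost_per_success_usd": spend / successes if successes else None,
    }


traces = [
    TaskTrace("platform-a", True, 0.50),
    TaskTrace("platform-a", False, 0.25),
    TaskTrace("platform-a", True, 0.25),
    TaskTrace("platform-a", True, 0.50),
    TaskTrace("platform-b", True, 0.20),
    TaskTrace("platform-b", True, 0.20),
]
print(pilot_summary(traces, "platform-a"))
# {'tasks': 4, 'success_rate': 0.75, 'cost_per_success_usd': 0.5}
```

Comparing platforms on cost per successful task, rather than cost per call, rewards the one that finishes the job, which is usually what the pilot is trying to measure.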

Call to action

Want a tailored RFP and a two‑week pilot plan? Subscribe and reach out—our team at HireNinja can help you stand up an agent‑ready stack with MCP/OTel guardrails in days.
