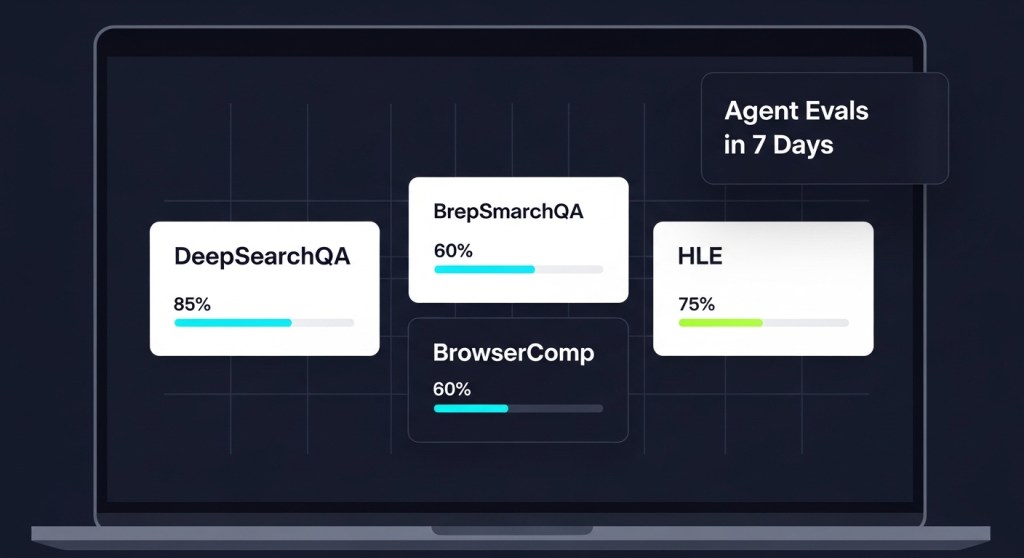

Ship Agent Evals in 7 Days: DeepSearchQA, BrowserComp, and Humanity’s Last Exam

It’s been a pivotal week for agentic AI. On December 11, 2025, Google unveiled a reimagined Deep Research agent and new benchmarks like DeepSearchQA, while OpenAI launched GPT‑5.2 the same day. Two days earlier, OpenAI, Anthropic, and Block formed the Agentic AI Foundation (AAIF) under the Linux Foundation to align on open standards such as MCP and Agents.md. Translation: your 2026 roadmap now depends on how well you can evaluate and govern agents—fast.

This guide gives startup founders and e‑commerce operators a simple, reliable, 7‑day plan to stand up agent evaluations using three benchmark styles now making headlines: DeepSearchQA (multi‑step research), BrowserComp (browser tool‑use), and Humanity’s Last Exam (general knowledge under pressure). You’ll also wire in guardrails, ship a weekly regression suite, and define SLAs your board can understand.

Why agent evals are different from model evals

- They’re task‑centric. You’re evaluating systems (LLM + tools + policies + data) on outcomes, not just model scores.

- Failures are compounding. A single hallucinated step can poison a 20‑step workflow.

- Security matters. As Trend Micro warns (rise of “vibe crime”), attackers are already chaining agents. Your evals must include misuse and abuse scenarios—not just happy paths.

What to measure (and the thresholds to start with)

- Task success rate (TSR): % of tasks completed exactly as specified. Target ≥ 85% for production candidates.

- Hallucination rate: % of runs with fabricated facts or unsupported claims. Target ≤ 2% on research tasks; ≤ 1% for finance/health.

- Unsafe action rate: % of attempts blocked by policy/guardrails. Trend downward over time.

- Average steps-to-success: Proxy for latency and reliability. Ratchet down weekly.

- Human‑escalation rate: % of runs requiring human help. Target ≤ 10% for support flows; stricter for payments or refunds.

- Cost per resolved task: Tokens + API + infra. Compare to human benchmark.

The 7‑day rollout

Day 1 — Pick 10 critical tasks and codify success

Choose the 10 workflows that move revenue or mitigate risk. Examples: WISMO deflection, order lookup, refund policy triage, competitor scan, monthly investor brief. For each, write a one‑page test card: inputs, expected artifacts, allowed tools, and verifiable acceptance criteria (links, numbers, or database writes).

Day 2 — Instrument your agents

Add structured logs: trace_id, session_id, tool calls, prompts, responses, cost, and policy decisions. Capture final artifacts (docs, tickets, emails). If you’re using MCP connectors, log tool schemas and errors. This enables replay and rewind when things go wrong.

Day 3 — Build your “golden” eval set

Convert 50–100 real tickets, emails, and briefs into labelled tasks across three buckets:

- DeepSearchQA‑style research (e.g., “Summarize last quarter’s returns spike and cite sources”).

- BrowserComp‑style web workflows (e.g., “Find three competitor bundle deals and capture screenshots + prices”).

- HLE‑style knowledge checks or domain quizzes (policy, compliance, catalog rules).

For each task, store an answer key or scorer that checks facts, URLs, and required fields.

Day 4 — Establish baselines across two frontier models

Run the same suite on your current model plus one alternative (e.g., GPT‑5.2 vs. Gemini 3 Pro / Deep Research). Keep prompts and tools constant; only swap the model. Record TSR, hallucinations, steps, cost, and time. Save ranked examples of both great and bad runs for training and team reviews.

Day 5 — Add guardrails and “agent firewalls”

Wire policy checks before risky actions: purchases, refunds, account changes, sends, deletes. Block unsafe tool calls; require approvals; add content filters. Re‑run the suite and confirm TSR stays high while unsafe action rate drops. Document playbooks for incident response and rollbacks.

Day 6 — Automate weekly regressions

Schedule your eval suite nightly or weekly. Track trendlines on a small dashboard: TSR, cost per task, escalations, and latency. Gate deployments on minimum thresholds. Add 5 new real‑world tasks every week to prevent overfitting.

Day 7 — Publish SLAs and pilot with one team

Turn your metrics into business statements: “WISMO deflection ≥ 60% with CSAT ≥ 4.4,” “Refund triage accuracy ≥ 95% with human approval,” “Research briefs cite ≥ 3 sources with 0% hallucinations.” Pilot with one e‑commerce brand or one internal function; expand once you see two consecutive green weeks.

Template: your minimal eval harness

- Task schema: JSON describing inputs, allowed tools, scorer type, and acceptance criteria.

- Runner: Calls your agent with the task; captures traces, artifacts, and costs.

- Scorers: Exact‑match (IDs, totals), reference checks (citations, URLs), and heuristic graders (structure, tone). For research, require verifiable citations.

- Reports: Per‑task and aggregate CSV/HTML with links to replays.

- Policy hooks: Before/after tool calls to enforce approvals and blocklists.

Real examples to start with

- E‑commerce support: 20 WISMO tickets + 10 returns triage + 10 product Q&A using your catalog and order API.

- Founder research: 10 competitive scans + 5 vendor due‑diligence briefs with citations and saved PDFs.

- Finance ops: 10 revenue‑reconciliation checks against your data warehouse stub.

Governance and standards you can adopt now

Use AAIF building blocks—MCP for tool connectivity and Agents.md to publish rules your agents must honor. Keep an audit trail of prompts, tools, and decisions for every task run. Your legal team will thank you.

Security note: include abuse tests

Add “red team” items to your suite: prompt‑injection attempts, data exfiltration, and risky purchase flows. Track blocked vs. allowed. With agentic cyber‑threats rising, this isn’t optional.

Recommended reading from HireNinja

- Google’s Deep Research Agent and OpenAI GPT‑5.2: A 7‑Day Founder Plan

- Agent Firewalls Are Here: Lock Down Your AI Agents

- Open Standards for AI Agents (AAIF): Why It Matters

- 10 Agent Automations E‑commerce Stores Can Ship in 72 Hours

- Agent App Stores: Package, Price, and Distribute Your Agents

External context

- Google’s upgraded Deep Research agent and new benchmarks landed on December 11, 2025 (TechCrunch).

- AAIF launched December 9, 2025 to standardize agent tooling like MCP and Agents.md (WIRED).

- Security teams are flagging agent‑enabled cybercrime (“vibe crime”) and urging stronger defenses (Trend Micro via ITPro).

Bring it home

If you can baseline two models, enforce guardrails, and automate weekly regressions, you’ll be ready for agent app stores, enterprise catalogs, and stricter buyer scrutiny in 2026. Start with 10 tasks. Measure relentlessly. Ship small improvements every week.

Get help in hours, not weeks

Want a plug‑and‑play eval harness, prebuilt scorer templates, and policy hooks? HireNinja can set up your first suite and train your team. Book a free eval audit today and be production‑ready next week.

Leave a comment