Quick plan for this article

- Scan the landscape: what changed this week (Agent 365, Gemini 3, Antigravity, AgentKit Evals) and why it matters.

- Define agent SLOs and KPIs founders can defend in a board meeting.

- Instrument tracing with OpenTelemetry in under a day (no vendor lock‑in).

- Stand up E2E evals using AgentKit’s Evals for Agents and trace grading.

- Wire dashboards, red‑team tests, and CI/CD quality gates.

- Ship in 7 days with a repeatable checklist and benchmarks.

Why now: the agent floor just moved

Microsoft unveiled Agent 365 to register, govern, and monitor fleets of enterprise agents—think RBAC, access, and oversight for bots at scale. That’s a signal: execs expect agent SLAs and audits, not demos. citeturn0news12

Google launched Gemini 3 and Antigravity, an agent‑first IDE that produces verifiable “Artifacts” from agent actions. This raises the bar on observability and provenance for agentic dev. citeturn1search3turn1news12

OpenAI’s AgentKit added Evals for Agents with datasets, trace grading, and automated prompt optimization—exactly what teams need to move from experiments to production reliability. citeturn3search2turn0search0

Interoperability is accelerating (Google’s A2A, Microsoft adoption), and agentic checkout standards (Google’s AP2, OpenAI/Stripe’s ACP) are converging. If your agents touch payments or customer data, you’ll need consistent SLOs, evals, and traces—yesterday. citeturn0search7turn1search4turn2search0turn2search1

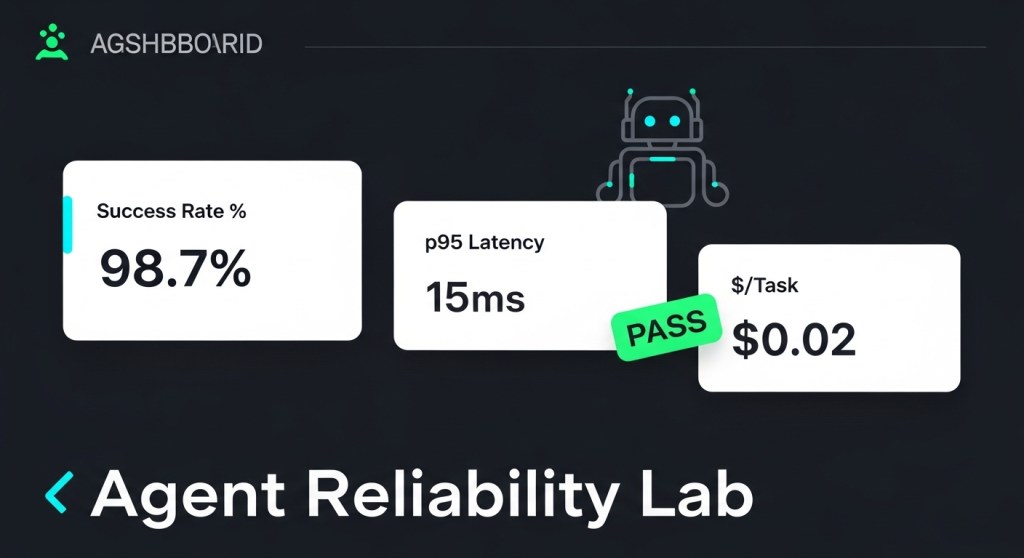

Outcome first: SLOs and KPIs every agent team should track

Before tooling, set targets you can defend with investors and compliance:

- Task Success Rate (TSR): % of tasks meeting acceptance criteria without human rescue (target: ≥92%).

- p95 Latency: end‑to‑end time per task (target: <8s for support/search; <20s for multi‑step ops).

- Cost per Task: all‑in model + tools + retries (target: within budget envelope; see our cost‑control playbook).

- Tool‑Call Accuracy: correct tool, correct params (target: ≥97%).

- Deferral Rate: % of cases escalated to bigger model/human (target: stable near 5–10% with quality maintained).

- Security Incidents: prompt‑injection/data‑exfil events per 1k tasks (target: 0; alert on any occurrence).

These map cleanly to NIST AI RMF’s guideposts on measurement, risk tolerance, and human‑AI oversight—useful when you brief legal or GRC. citeturn3search0turn3search1turn3search3

Day‑by‑day: build your Agent Reliability Lab in 7 days

Day 1 — Define scope and acceptance tests

- Pick 2–3 critical workflows (e.g., support triage, SEO brief, returns automation). Document inputs/outputs, guardrails, and success criteria.

- Create a failure taxonomy (hallucination, wrong tool, unsafe action, cost spike, timeout).

- Draft target SLOs (above) and an error budget (e.g., 5% monthly TSR error) to gate releases.

Day 2 — Instrument OpenTelemetry traces

- Add OTEL tracing to your agent runtime. If you use LlamaIndex or OpenLLMetry, you can be up in minutes; both emit standard OTEL that flows to Elastic, Grafana, SigNoz, etc. citeturn5search6turn5search0turn5search2turn5search5

- Capture spans for: task, sub‑task, LLM call, tool call (with redacted params), A2A handoff, and external API I/O. Use emerging gen‑AI semantic conventions as a guide. citeturn4search0turn4search4

Minimal Python sketch (illustrative):

from opentelemetry import trace

tracer = trace.get_tracer("agents.reliability")

with tracer.start_as_current_span("task.support_refund", attributes={"channel": "email", "priority":"high"}):

with tracer.start_as_current_span("llm.plan"):

plan = llm.plan(ticket)

with tracer.start_as_current_span("tool.fetch_order", attributes={"order_id": obfuscated_id}):

order = oms.get(order_id)

with tracer.start_as_current_span("llm.decide_refund"):

decision = llm.decide(order, policy)

Day 3 — Stand up E2E evals with AgentKit

- Use AgentKit Evals for Agents to build datasets and trace grading for your workflows. Start with 30–50 golden cases per workflow; add hard negatives weekly. citeturn3search2

- Score TSR, Tool‑Call Accuracy, p95 Latency, and Cost/Task; auto‑optimize prompts with the built‑in prompt optimizer for low‑performing cases. citeturn3search2

Day 4 — Add security and red‑team tests

- Simulate prompt injection and data exfiltration attempts; verify that the agent refuses unsafe actions and logs mitigations in traces. Research like AgentSight shows OS‑level boundary tracing can catch malicious behavior outside your app code—useful for high‑risk flows. citeturn5academia13

- Record red‑team cases in your eval dataset; failures block promotion.

Day 5 — Dashboards and alerts

- Publish a single reliability dashboard: TSR, p95, Deferral Rate, Cost/Task, incidents by type. Feed from your OTEL backend (Elastic/SigNoz/Grafana). citeturn5search2turn5search5

- Alert on SLO breaches and semantic-cascade drift signals (e.g., rising deferrals). Ensemble‑agreement methods can reduce cost while keeping quality. citeturn4academia13

Day 6 — CI/CD gates + governance

- Wire evals to CI: PRs or model swaps must meet SLOs on golden sets. Fail fast if cost or p95 regress >10%.

- Connect to your agent registry + RBAC and identity to log who/what changed and why—helps with Agent 365‑style audits. citeturn0news12

Day 7 — Ship, review, and set a 30‑day hardening plan

- Run a 24‑hour canary with live traffic. Compare canary vs control on TSR, p95, Cost/Task; roll forward only if within error budget.

- Publish a one‑pager to leadership with current SLOs, error budget burn, and top 3 fixes for the next sprint.

A2A/MCP specifics: what to trace and test

- Handoffs: record A2A intents, recipient, and outcome; assert the receiving agent’s first action is valid (e.g., safe tool call). Industry movement toward A2A makes this observable handoff core. citeturn0search7

- Checkout: if you’re piloting AP2/ACP, trace mandate issuance/validation, tokenization, and completion webhooks; add evals for amount caps, merchant of record, and user confirmation. citeturn1search4turn2search1

Pair this with our PCI/SCA mapping and agentic checkout guide for compliance guardrails. citeturn2search0

What “good” looks like in week 2

- TSR ≥92% on golden sets; p95 latency within 10% of target.

- Cost/Task down 15–25% via prompt diet, routing, and deferrals—see our 14‑day cost playbook.

- Zero unresolved security incidents; all red‑team prompts logged and mitigated.

- Dashboards live; CI gates blocking regressions; change controls enforced via our registry/RBAC.

Tools you can use without lock‑in

- Tracing: OpenTelemetry + OpenLLMetry or LlamaIndex OTEL; send to Elastic, SigNoz, or Grafana Tempo. citeturn5search0turn5search6turn5search2turn5search5

- Evals: AgentKit Evals for Agents (datasets, trace grading, prompt optimizer). citeturn3search2

- Advanced: boundary tracing (AgentSight) for high‑risk agents. citeturn5academia13

Internal resources

- A2A interoperability (AgentKit ↔ Agentforce ↔ MCP)

- Agent identity (AgentCards, AP2, OAuth/OIDC)

- Agent registry & RBAC

The takeaway

The agent platforms are maturing fast, but reliability is on you. In one week you can instrument traces, stand up evals, set SLOs, and wire CI gates—so when leadership asks “Are these agents safe, fast, and cost‑effective?”, you’ll have the dashboard—and the receipts—to answer yes.

Call to action: Need help implementing this 7‑day lab or running a bake‑off across AgentKit, Agentforce, and Antigravity? Subscribe for weekly playbooks or contact HireNinja for a guided pilot.

Leave a comment