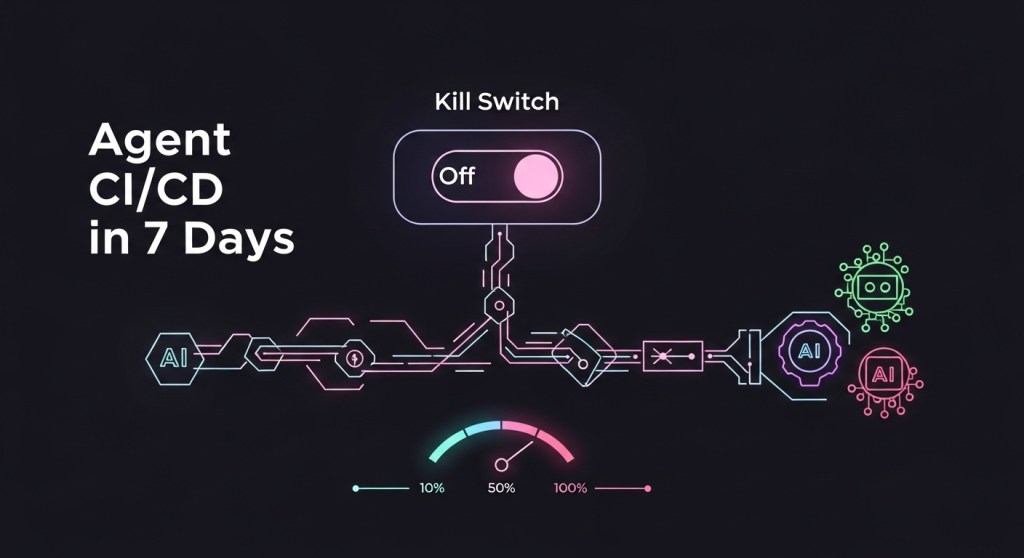

Summary: Use this 7‑day playbook to stand up Agent CI/CD for Model Context Protocol (MCP) and A2A (agent‑to‑agent) workloads—complete with shadow testing, canary releases, human approvals, instant kill switches, and OpenTelemetry‑backed KPIs.

Why now

Enterprises are moving from a few pilots to fleets of agents. Microsoft’s new Agent 365 frames the need for registries, policy, and oversight at scale, signaling that bot fleets will be managed much like employees. Source. Meanwhile, OpenAI’s support for MCP and its connectors makes it easier for agents to act in production systems, which raises the bar for safe deployment and rapid rollback. Docs · Help Center.

If you already shipped the building blocks—registry & RBAC, reliability lab with Evals + OTel, and an agent firewall—this is the missing layer that lets you deploy continuously without fear.

What you’ll build in 7 days

- Shadow testing for every agent change; no user impact.

- Canary releases with automated promotion/rollback based on KPIs.

- Human approvals at key risk gates.

- Instant kill switches via feature flags and config.

- OpenTelemetry-instrumented traces and Gen‑AI metrics for spend, quality, and latency.

Pre‑reqs

- MCP‑ready agent stack (OpenAI Agents SDK or compatible). Guide.

- Registry/RBAC (Agent 365 or your own). See our 7‑day registry guide.

- Agent firewall/policies (prompt‑injection, tool scoping). See agent firewall.

- Observability backend with OTel Collector.

Day‑by‑day plan

Day 1 — Baseline your pipelines and KPIs

Create a Git‑based pipeline per agent with environments: shadow, canary, prod. Define SLOs and promotion criteria (examples):

- Cost per successful task: (gen_ai.client.token.usage × price per token) ÷ count of task_success ≤ target. OTel Gen‑AI metrics.

- Task success rate ≥ 95% on eval set; escalation rate ≤ 3%.

- Median latency < Xs; P95 < Ys.

Emit standardized attributes using OTel semantic conventions so dashboards stay consistent across agents. OTel SemConv.
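As a sketch of how those promotion criteria could become a single pass/fail gate in the pipeline, the Python below evaluates one agent version's aggregated numbers. The `StageMetrics` and `PromotionCriteria` shapes are hypothetical, spend is assumed to be already converted from token usage to dollars, and the latency thresholds are left as required parameters because the X and Y targets depend on your workload.

```python
from dataclasses import dataclass


@dataclass
class StageMetrics:
    """Aggregated numbers for one agent version in one environment (hypothetical shape)."""
    tasks: int             # tasks attempted
    successes: int         # tasks that passed the success check
    escalations: int       # tasks handed off to a human
    spend_usd: float       # token usage already converted to spend
    latency_p50_s: float
    latency_p95_s: float


@dataclass
class PromotionCriteria:
    """Thresholds mirroring the example SLOs; tune every value for your agent."""
    max_cost_per_success_usd: float
    max_latency_p50_s: float   # the "X" target
    max_latency_p95_s: float   # the "Y" target
    min_success_rate: float = 0.95
    max_escalation_rate: float = 0.03


def failed_checks(m: StageMetrics, c: PromotionCriteria) -> list[str]:
    """Return the names of breached criteria; an empty list means the version may be promoted."""
    if m.tasks == 0 or m.successes == 0:
        return ["no_successful_tasks"]
    checks = {
        "cost_per_success": m.spend_usd / m.successes <= c.max_cost_per_success_usd,
        "success_rate": m.successes / m.tasks >= c.min_success_rate,
        "escalation_rate": m.escalations / m.tasks <= c.max_escalation_rate,
        "latency_p50": m.latency_p50_s < c.max_latency_p50_s,
        "latency_p95": m.latency_p95_s < c.max_latency_p95_s,
    }
    return [name for name, ok in checks.items() if not ok]
```

Returning the list of breached checks, rather than a bare boolean, lets the pipeline log exactly why a promotion was blocked.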

Day 2 — Add shadow testing to every PR

Wire your pipeline to deploy the new agent version in shadow alongside the current production agent. Shadow receives mirrored traffic or a replayed eval set; it can only observe and log.

- For HTTP/K8s apps, use Argo Rollouts Experiment with baseline/canary templates to run A/B shadows safely. Docs.

- For browser agents, keep actions read‑only during shadow to avoid unintended writes.

Gate merge on evals + tracing checks from your Agent Reliability Lab.
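A minimal sketch of a shadow replay, assuming a hypothetical naming convention in which tools ending in `.write` mutate state. The candidate agent gets a wrapped toolbox that records attempted writes instead of executing them, and its answers are compared with the production baseline. Exact-match comparison stands in for the eval graders a real reliability lab would use.

```python
from dataclasses import dataclass, field
from typing import Callable


def is_write_tool(tool_name: str) -> bool:
    # Hypothetical convention: tools named "*.write" change external state.
    return tool_name.endswith(".write")


class ShadowToolBox:
    """Wraps real tools so a shadow agent can read but never write."""

    def __init__(self, tools: dict[str, Callable[..., object]]):
        self._tools = tools
        self.blocked_calls: list[str] = []

    def call(self, name: str, **kwargs):
        if is_write_tool(name):
            # Record the attempted write for review instead of executing it.
            self.blocked_calls.append(name)
            return {"shadow": True, "blocked": name}
        return self._tools[name](**kwargs)


@dataclass
class ShadowReport:
    total: int = 0
    matches: int = 0
    mismatches: list = field(default_factory=list)


def run_shadow(replay_inputs, baseline, candidate, toolbox: ShadowToolBox) -> ShadowReport:
    """Replay recorded inputs through both agents and compare their answers."""
    report = ShadowReport()
    for item in replay_inputs:
        expected = baseline(item)            # production answer (what users actually got)
        observed = candidate(item, toolbox)  # shadow answer: logged only, never served
        report.total += 1
        if observed == expected:
            report.matches += 1
        else:
            report.mismatches.append(item)
    return report
```

The `blocked_calls` list is worth surfacing in the PR check: a candidate that suddenly tries new write tools deserves review even if its answers match.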

Day 3 — Introduce canary releases with automated analysis

Promote from shadow → canary with progressive traffic splitting and automated analysis:

- Kubernetes: Argo Rollouts with NGINX/Istio/Consul traffic shaping and AnalysisTemplates that query your KPIs; auto‑promote or auto‑rollback. Overview · NGINX.

- Canary design: follow SRE guidance—one canary at a time, short duration if you deploy often, and representative users. Google SRE canarying.
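Argo Rollouts runs this loop for you from the steps in the manifest; the Python sketch here only illustrates the control flow it automates. `set_weight` and `analyze` are hypothetical callbacks standing in for your traffic router and your KPI queries.

```python
from typing import Callable


def run_canary(
    weights: list[int],
    set_weight: Callable[[int], None],
    analyze: Callable[[int], bool],
) -> str:
    """Step traffic through the given weights; roll back on the first failed analysis.

    set_weight: shifts that percentage of traffic to the canary.
    analyze:    queries KPIs for the current step and returns True if they pass.
    """
    for weight in weights:
        set_weight(weight)
        if not analyze(weight):
            set_weight(0)  # send all traffic back to the stable version
            return f"rolled_back_at_{weight}"
    set_weight(100)  # every step passed: full promotion
    return "promoted"
```

For example, `run_canary([10, 25, 50], router.set_weight, kpis_ok)` would walk the 10% → 25% → 50% ladder from the launch checklist, assuming `router` and `kpis_ok` exist in your tooling.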

Day 4 — Add human approvals where risk is high

Not every change needs manual review, but the ones that touch money, identity, or PII do. Use MCP tool approvals in your agent runtime or your CI to require a human to approve risky connectors/actions before promotion. Agents SDK (approvals).
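On the CI side, the approval rule can be a simple blocker list. This is a minimal sketch, assuming each tool change is tagged with hypothetical risk categories and that approvals are recorded per tool name; it is not the Agents SDK approval API, which works at runtime rather than at promotion time.

```python
from dataclasses import dataclass

# Risk categories that always require a human sign-off (from the rule above).
HIGH_RISK = {"money", "identity", "pii"}


@dataclass(frozen=True)
class ToolChange:
    tool: str
    risk_tags: frozenset[str]  # hypothetical tagging, e.g. frozenset({"money"})


def needs_approval(change: ToolChange) -> bool:
    return bool(change.risk_tags & HIGH_RISK)


def promotion_blockers(changes: list[ToolChange], approved: set[str]) -> list[str]:
    """Tools that touch money, identity, or PII and lack a recorded human approval."""
    return [c.tool for c in changes if needs_approval(c) and c.tool not in approved]
```

Low-risk changes such as a read-only FAQ search pass straight through, which keeps manual review focused on the changes that need it.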

Day 5 — Ship kill switches and load shedding

Feature flags give you single‑click rollback for a misbehaving agent or tool. Create:

- Service kill switch: disable an agent or a specific tool (e.g., “checkout.write”).

- Degrade modes: turn off expensive behaviors (e.g., web‑browse) under load.

- Ownership & TTL: who can flip, and when the flag is removed.

See operational flag practices (naming, RBAC, relay proxy). Guide · Best practices.
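Here is a minimal in-memory sketch of kill switches with an owner and a TTL. `FlagStore` is a hypothetical stand-in, not a vendor SDK; in production the same checks would sit behind your flag service and its relay proxy. A degrade mode uses the same mechanism, keyed on a behavior such as `web-browse` instead of a tool.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass
class KillSwitch:
    """Operational flag: when enabled, the named agent, tool, or behavior is switched OFF."""
    key: str              # e.g. "checkout.write", an agent id, or "web-browse"
    owner: str            # the only principal allowed to flip it
    expires_at: datetime  # TTL: review or remove the flag after this time
    enabled: bool = False


class FlagStore:
    """In-memory stand-in for a feature-flag service (hypothetical)."""

    def __init__(self, flags: list[KillSwitch]):
        self._flags = {f.key: f for f in flags}

    def flip(self, key: str, by: str, on: bool) -> None:
        flag = self._flags[key]
        if by != flag.owner:
            raise PermissionError(f"{by} may not flip {key}")
        flag.enabled = on

    def is_disabled(self, key: str) -> bool:
        flag = self._flags.get(key)
        return flag is not None and flag.enabled

    def expired(self, now: datetime) -> list[str]:
        """Flags past their TTL: candidates for cleanup."""
        return [k for k, f in self._flags.items() if f.expires_at <= now]


def call_tool(store: FlagStore, tool: str, fn, *args):
    # Check the switch on every call so a flip takes effect immediately.
    if store.is_disabled(tool):
        return {"error": f"{tool} is disabled by kill switch"}
    return fn(*args)
```

Running `expired()` on a schedule is one way to catch the long-lived flags that otherwise rot.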

Day 6 — Wire everything into observability

Add spans/metrics that tie business outcomes to release steps:

- agent.release.stage: shadow | canary | prod

- agent.id, agent.version, mcp.server, tool.name

- gen_ai.client.token.usage, task_success, task_escalated

Alert on budget KPIs (see our cost‑control playbook) and security signals (see firewall).
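Once those attributes are on every task, cost per success per release stage is a simple aggregation. This sketch assumes per-task records that already sum `gen_ai.client.token.usage` across calls (the OTel metric itself is a histogram recorded per operation) and uses a hypothetical blended token price.

```python
from collections import defaultdict

# Hypothetical blended price; substitute your model's real input/output prices.
USD_PER_1K_TOKENS = 0.002


def cost_per_success_by_stage(records: list[dict]) -> dict[str, float]:
    """Spend per successful task for each release stage.

    Each record is assumed to look like
    {"agent.release.stage": "canary", "gen_ai.client.token.usage": 1800, "task_success": True}.
    Stages with zero successes are omitted (cost per success is undefined there).
    """
    tokens: dict[str, int] = defaultdict(int)
    successes: dict[str, int] = defaultdict(int)
    for r in records:
        stage = r["agent.release.stage"]
        tokens[stage] += r["gen_ai.client.token.usage"]
        successes[stage] += int(r["task_success"])
    return {
        stage: (tokens[stage] / 1000 * USD_PER_1K_TOKENS) / successes[stage]
        for stage in tokens
        if successes[stage] > 0
    }
```

Comparing the `canary` and `prod` values side by side is what turns token telemetry into a promotion signal.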

Day 7 — Launch checklist

- Shadow: green on evals + traces for 24h or N requests.

- Canary: 10% → 25% → 50% with auto analysis; no SLO breach.

- Approvals: risky tools allowed only after review.

- Kill switches: tested in staging; owners on‑call.

- Runbooks: rollback, disable tool, degrade mode.

Architecture reference

Flow: Git push → CI builds agent → deploy to shadow (no writes) → auto evals + OTel checks → promote to canary with Argo Rollouts analysis → auto‑promote/rollback → prod. Approvals and flags can interrupt at any point.
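The flow can be read as a small state machine. This Python sketch is illustrative only: the stage names come from the flow above, while the `advance` function and its three boolean inputs are hypothetical simplifications of what your CI would compute.

```python
from enum import Enum


class Stage(Enum):
    SHADOW = "shadow"
    CANARY = "canary"
    PROD = "prod"
    ROLLED_BACK = "rolled_back"  # from shadow this simply means the change is rejected
    HALTED = "halted"


NEXT = {Stage.SHADOW: Stage.CANARY, Stage.CANARY: Stage.PROD}


def advance(stage: Stage, checks_passed: bool, approval_pending: bool,
            kill_switch_on: bool) -> Stage:
    """One pipeline transition; flags and approvals can interrupt at any stage."""
    if kill_switch_on:
        return Stage.HALTED        # an operator pulled the plug
    if approval_pending:
        return stage               # wait for a human before moving on
    if not checks_passed:
        return Stage.ROLLED_BACK   # evals, OTel checks, or canary analysis failed
    return NEXT.get(stage, stage)  # prod and terminal states stay put
```

Checking the kill switch first means an operator override always wins, even over a pending approval.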

Windows & MCP: If you’re on Windows fleets, the new MCP discovery/registry (ODR) improves visibility, containment, and audit across agents. Microsoft Learn.

Worked example: e‑commerce checkout helper

Use case: an agent assists with returns and replacements. Risks: payment actions, PII, fraud.

- Shadow: replay 5k anonymized sessions; block write tools.

- Canary: route 10% of post‑purchase chat flows; require human approval for payment updates.

- Kill switch: flags for “refunds.write” and “address.change”.

- KPIs: escalation ≤ 3%, refund errors ≤ 0.5%, median handle time −15% vs control.

If KPIs hold across two canary steps, auto‑promote; otherwise rollback and flip the tool‑level kill switch. This mirrors how modern platforms are bringing agents into frontline CX (see recent funding momentum in agent CX platforms). Reuters.
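The checkout helper's decision rule can be sketched as follows, assuming hypothetical per-step KPI snapshots for the canary and a control group. For simplicity it flips both example kill switches on any breach; a finer-grained version could map each KPI to the tool that caused it.

```python
from dataclasses import dataclass


@dataclass
class CheckoutKpis:
    escalation_rate: float       # share of conversations handed to a human
    refund_error_rate: float     # share of refund actions that were wrong
    median_handle_time_s: float


def step_passes(canary: CheckoutKpis, control: CheckoutKpis) -> bool:
    """Example KPIs: escalation <= 3%, refund errors <= 0.5%,
    and median handle time at least 15% below the control group."""
    return (
        canary.escalation_rate <= 0.03
        and canary.refund_error_rate <= 0.005
        and canary.median_handle_time_s <= 0.85 * control.median_handle_time_s
    )


def decide(steps: list[tuple[CheckoutKpis, CheckoutKpis]]) -> tuple[str, list[str]]:
    """Promote after two passing canary steps; roll back and return the
    kill switches to flip on the first failure; otherwise keep waiting."""
    for canary, control in steps[:2]:
        if not step_passes(canary, control):
            return "rollback", ["refunds.write", "address.change"]
    if len(steps) >= 2:
        return "promote", []
    return "wait", []
```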

Implementation notes

- Argo Rollouts: progressive traffic, automated analysis, and experiments for shadow trials. Docs.

- Feature flags: treat kill switches as short‑term operational flags with owners + TTL. Best practices.

- OTel: adopt gen‑AI metrics for token usage and tie them to release stages to calculate cost per success. Spec.

- MCP connectors: use require‑approval for write actions and restrict scopes. Guide.

Common pitfalls

- Long‑lived flags that rot and break months later—add TTLs and clean up. Guide.

- Multiple parallel canaries contaminating signals—run one at a time. SRE workbook.

- Unobserved browser agents—keep a strict shadow mode and add a firewall.

Where this fits in your stack

Pair this with our Agent 365 prep plan and Always‑On SEO Agent playbook to get an end‑to‑end AgentOps foundation.

TL;DR rollout template (K8s + Argo Rollouts)

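```yaml
# Fragment of a Rollout spec (spec.strategy): canary with automated analysis.
# Service and Ingress names are placeholders; the referenced AnalysisTemplates
# (task-success-rate, cost-per-success, escalation-rate) must already exist.
strategy:
  canary:
    canaryService: agent-canary       # Service selecting canary pods
    stableService: agent-stable       # Service selecting stable pods
    trafficRouting:
      nginx:
        stableIngress: agent-ingress  # existing Ingress that routes to agent-stable
    steps:
      - setWeight: 10
      - pause: { duration: 5m }
      - analysis:
          templates:
            - templateName: task-success-rate
            - templateName: cost-per-success
      - setWeight: 50
      - pause: { duration: 10m }
      - analysis:
          templates:
            - templateName: escalation-rate
```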

See official docs for full manifests and providers. Argo Rollouts.

Call to action: Need help implementing Agent CI/CD or audits for MCP/A2A? Subscribe for new playbooks, or talk to HireNinja about a 2‑week AgentOps jumpstart.
