Planned steps

- Scan recent competitor news to confirm what’s trending.

- Clarify audience and problems this guide solves.

- Map content gaps and pick a high‑value, searchable topic.

- Do lightweight SEO research and define keywords.

- Draft a 7‑day, step‑by‑step implementation playbook with links and code.

- Include internal links to related HireNinja posts and external sources.

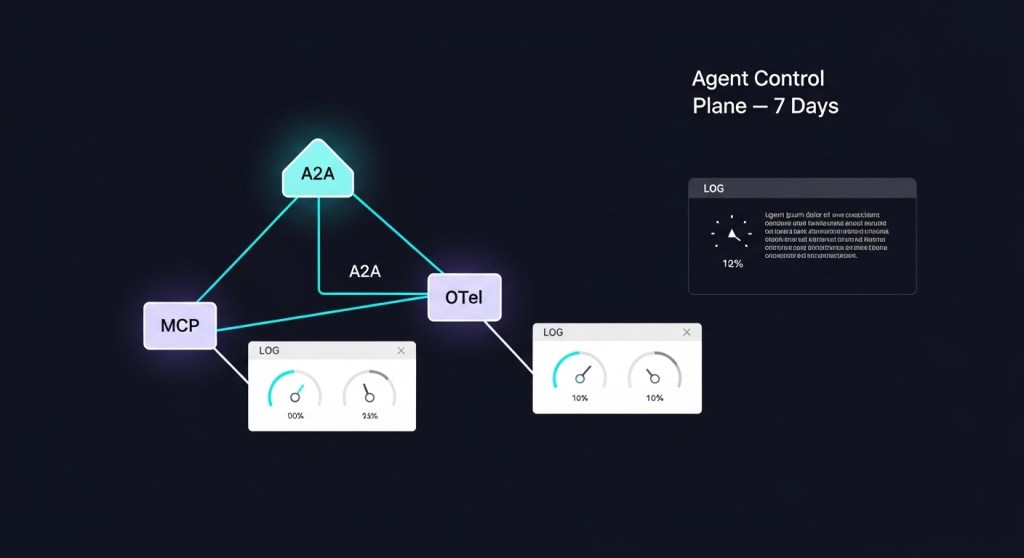

Build an Internal AI Agent Control Plane in 7 Days (MCP + A2A + OpenTelemetry)

In the last few weeks, the “agent control plane” idea went mainstream. Microsoft introduced Agent 365 to centrally manage AI agents like a workforce, with registries, access controls, and live telemetry. (Wired) (The Verge) Salesforce updated its agent platform with Agentforce 360, and OpenAI launched AgentKit for building and operating agents. (TechCrunch) (TechCrunch)

If you don’t live entirely in one vendor’s stack, here’s how to ship a vendor‑agnostic control plane in seven days using three open pieces: MCP for secure tool access and server integrations, A2A for cross‑agent collaboration, and OpenTelemetry for end‑to‑end observability. We’ll add SLOs, evals, and policy so you can scale safely.

What you’ll build

- Agent Registry with versioned metadata (owners, scopes, capabilities, risk). Uses A2A-style Agent Cards for discovery and wiring.

- Policy & Access with least‑privilege roles and environment scoping, aligned to MCP’s OAuth 2.1 updates. (MCP changelog)

- Observability using OpenTelemetry Gen‑AI semantic conventions for latency and token usage, plus custom attributes for success rate and cost per task. (OpenTelemetry)

- Evals & Red Team with OpenAI’s agent evals for regression tests and guardrail checks. (OpenAI docs)

Who this is for

Startup founders, e‑commerce operators, and platform teams who want to run multiple agents (support, finance ops, merchandising, growth) safely—without locking into one vendor or waiting for long enterprise rollouts.

7‑Day Plan

Day 1 — Scope, Risks, and SLOs

- Pick two high‑impact tasks (e.g., support triage, checkout recovery). Define SLOs: time‑to‑first‑token, time‑to‑outcome, success rate, cost per task.

- Draft a simple risk register (PII exposure, prompt injection, tool abuse). Tie each risk to a control (rate limits, content scanning, human‑in‑the‑loop).

- Read: our 7‑day SLO playbook to define metrics and alerts. (HireNinja)
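To make the Day 1 SLOs concrete, here is a minimal sketch of how a batch of task runs could be checked against targets. The `TaskRun` and `Slo` types, the field names, and the choice of p95 for latency are assumptions made for illustration; they do not come from any library or from the playbook linked above.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class TaskRun:
    ttft_ms: float      # time to first token
    outcome_ms: float   # time to outcome
    success: bool
    cost_usd: float


@dataclass
class Slo:
    ttft_p95_ms: float
    outcome_p95_ms: float
    min_success_rate: float
    max_cost_per_task_usd: float


def p95(values):
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return quantiles(values, n=20, method="inclusive")[-1]


def evaluate(runs, slo):
    """Return {slo_name: (observed_value, met)} for a batch of at least two runs."""
    success_rate = sum(r.success for r in runs) / len(runs)
    cost_per_task = sum(r.cost_usd for r in runs) / len(runs)
    ttft = p95([r.ttft_ms for r in runs])
    outcome = p95([r.outcome_ms for r in runs])
    return {
        "ttft_p95_ms": (ttft, ttft <= slo.ttft_p95_ms),
        "outcome_p95_ms": (outcome, outcome <= slo.outcome_p95_ms),
        "success_rate": (success_rate, success_rate >= slo.min_success_rate),
        "cost_per_task_usd": (cost_per_task, cost_per_task <= slo.max_cost_per_task_usd),
    }
```

Calling `evaluate(runs, slo)` on a day's worth of runs gives a small report you could feed into alerts; any entry whose second element is `False` is an SLO breach.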

Day 2 — Instrumentation with OpenTelemetry

- Deploy the OpenTelemetry Collector and your preferred backend (Grafana, Datadog, New Relic).

- Instrument LLM/tool calls with Gen‑AI metrics (token usage, errors) and traces for tool invocations.

# Example span attributes to record (pseudo-code)

span.set_attribute("gen_ai.request.model", model)

span.set_attribute("gen_ai.usage.input_tokens", input_tokens)

span.set_attribute("gen_ai.usage.output_tokens", output_tokens)

# Custom attributes (not part of the Gen-AI conventions)

span.set_attribute("ai.agent.task.success", success)

span.set_attribute("ai.agent.task.cost.usd", cost)

Reference: OpenTelemetry Gen‑AI metrics. (spec)
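As a rough illustration of the instrumentation step, the helper below builds the attribute set for one LLM call as a plain dictionary, so it can be tested without a tracing backend. The token attribute names follow the Gen‑AI span conventions as currently published (`gen_ai.usage.input_tokens`, `gen_ai.usage.output_tokens`); `gen_ai.client.token.usage` is the name of the token-usage metric there, not a span attribute. Those conventions are still evolving, so check the version you target. The `ai.agent.*` keys, the function name, and the per-1k-token prices are assumptions for this sketch.

```python
def gen_ai_span_attributes(model, input_tokens, output_tokens, success,
                           usd_per_1k_input, usd_per_1k_output):
    """Build span attributes for one LLM call, including a derived cost."""
    # Cost model is an assumption: a flat price per 1,000 tokens in each direction.
    cost = (input_tokens / 1000) * usd_per_1k_input \
        + (output_tokens / 1000) * usd_per_1k_output
    return {
        "gen_ai.request.model": model,
        "gen_ai.usage.input_tokens": input_tokens,
        "gen_ai.usage.output_tokens": output_tokens,
        # Custom, non-standard attributes for task outcome and spend.
        "ai.agent.task.success": success,
        "ai.agent.task.cost.usd": round(cost, 6),
    }
```

With the OpenTelemetry Python API, the resulting dictionary could be applied to an active span in one call via `span.set_attributes(attrs)`.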

Day 3 — MCP servers + OAuth 2.1

- Stand up one MCP server for a safe tool (e.g., product catalog or ticket search). Use OAuth 2.1 as described in the latest MCP authorization spec so agents connect with scoped tokens.

- Add tool annotations (read‑only vs destructive), and validate responses to reduce prompt‑injection blast radius.

Notes: MCP’s 2025 updates strengthened auth and added structured tool output—ideal for governed tool access. (spec changelog) (GitHub MCP docs)
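The annotation and validation ideas from Day 3 can be sketched without an MCP SDK. The hint names `readOnlyHint` and `destructiveHint` are the tool annotation fields defined in the MCP spec; everything else here (the `TOOLS` table, the tool names, the expected output fields) is hypothetical and only shows the shape of the check.

```python
# Hypothetical tool table: annotations plus the output fields we expect back.
TOOLS = {
    "catalog.search": {
        "annotations": {"readOnlyHint": True},
        "output_fields": {"sku": str, "title": str, "price": float},
    },
    "orders.refund": {
        "annotations": {"readOnlyHint": False, "destructiveHint": True},
        "output_fields": {"refund_id": str},
    },
}


def requires_review(tool_name):
    """Treat a tool as needing review unless it is explicitly read-only."""
    ann = TOOLS[tool_name].get("annotations", {})
    if ann.get("readOnlyHint", False):
        return False
    # The spec's default for destructiveHint is true, so missing means risky.
    return ann.get("destructiveHint", True)


def validate_output(tool_name, payload):
    """Reject tool output with unexpected fields or wrong types."""
    expected = TOOLS[tool_name]["output_fields"]
    unexpected = set(payload) - set(expected)
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")
    for field, typ in expected.items():
        if not isinstance(payload.get(field), typ):
            raise ValueError(f"field {field!r} missing or not {typ.__name__}")
    return payload
```

Rejecting unexpected fields is deliberately strict: a tool response that suddenly carries an extra free-text field is one of the places injected instructions tend to hide.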

Day 4 — Agent Registry with A2A Agent Cards

- Create a Git repo folder /agents/ with one Agent Card (JSON) per agent: owner, purpose, capabilities, endpoints, scopes, SLOs.

- Expose cards over HTTPS and index them in a simple registry page for discovery.

{

"name": "checkout-recovery",

"version": "0.2.1",

"owner": "growth@acme.com",

"capabilities": ["cart-lookup", "discount-offer"],

"a2a": {"endpoint": "https://agents.acme.com/checkout"},

"policy": {"pii": "masked", "env": "staging"},

"slos": {"tft_ms": 1500, "success_rate": 0.85}

}

Background: A2A gives agents a common language to collaborate across vendors and clouds. Google announced the protocol, and Microsoft has committed to supporting it. (Google) (TechCrunch)
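A registry is only useful if every card in it is well formed. The sketch below validates cards shaped like the example above and builds a small discovery index. The required-field list mirrors that example card, which is an A2A-style layout used in this guide and not the official A2A Agent Card schema; the function names are hypothetical.

```python
REQUIRED_FIELDS = {
    "name": str,
    "version": str,
    "owner": str,
    "capabilities": list,
    "a2a": dict,
    "policy": dict,
    "slos": dict,
}


def validate_card(card):
    """Return a list of human-readable problems; an empty list means valid."""
    errors = []
    for field, typ in REQUIRED_FIELDS.items():
        if field not in card:
            errors.append(f"missing field: {field}")
        elif not isinstance(card[field], typ):
            errors.append(f"field {field} should be {typ.__name__}")
    a2a = card.get("a2a")
    endpoint = a2a.get("endpoint", "") if isinstance(a2a, dict) else ""
    if not isinstance(endpoint, str) or not endpoint.startswith("https://"):
        errors.append("a2a.endpoint must be an https URL")
    return errors


def build_index(cards):
    """Index valid cards by name for a simple registry page."""
    return {
        c["name"]: {
            "version": c["version"],
            "owner": c["owner"],
            "endpoint": c["a2a"]["endpoint"],
        }
        for c in cards
        if not validate_card(c)
    }
```

Running `validate_card` in CI on every file under the agents folder would keep ownerless or endpoint-less cards out of the registry.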

Day 5 — Policy, RBAC, and Environment Boundaries

- Map identities (service principals or app registrations) to agents. Issue short‑lived tokens with constrained scopes.

- Define destructive‑action review (refunds, bulk emails) requiring human approval or sandbox execution.

- Block production data access in dev/staging via policy guardrails at the MCP gateway.
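The three Day 5 rules (short-lived scoped tokens, human approval for destructive actions, environment boundaries) can be expressed as one authorization check at the gateway. This is a simplified sketch: the `Token` type, the scope strings, and the `authorize` function are hypothetical, and a real deployment would verify signed tokens from your identity provider instead of trusting an in-memory object.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    agent: str
    scopes: frozenset
    env: str            # environment the token was issued for
    expires_at: float   # Unix timestamp


# Hypothetical scopes that count as destructive and need a human approver.
DESTRUCTIVE_SCOPES = {"refund:create", "email:bulk_send"}


def authorize(token, scope, target_env, approved_by=None, now=None):
    """Return (allowed, reason) for one tool call."""
    now = time.time() if now is None else now
    if now >= token.expires_at:
        return (False, "token expired")
    if token.env != target_env:
        return (False, "environment mismatch")
    if scope not in token.scopes:
        return (False, "scope not granted")
    if scope in DESTRUCTIVE_SCOPES and approved_by is None:
        return (False, "human approval required")
    return (True, "ok")
```

Because the environment check runs before the scope check, a staging token can never reach production data even if its scopes would otherwise allow the call.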

Day 6 — Evals and Red‑Team

- Use OpenAI Agent Evals to create regression tests for your top workflows (e.g., refund eligibility, tone compliance). (docs)

- Run a 48‑hour red‑team sprint against critical agents. (HireNinja guide) Then apply fixes via policy and tool scopes. For long‑term hardening, follow our 30‑day plan. (HireNinja)
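Whatever eval tooling you use, the regression gate itself is simple. The sketch below is vendor-neutral and does not use OpenAI's eval API; it assumes an agent can be called as a plain function and that each case has an exact expected label, which is a simplification for workflows like refund eligibility. The function name and case format are hypothetical.

```python
def run_regression(agent, cases, min_pass_rate=0.9):
    """Run labeled cases through an agent callable and apply a pass-rate gate."""
    failures = []
    for case in cases:
        actual = agent(case["input"])
        if actual != case["expected"]:
            failures.append({"input": case["input"],
                             "expected": case["expected"],
                             "actual": actual})
    pass_rate = (len(cases) - len(failures)) / len(cases)
    return {
        "pass_rate": pass_rate,
        "failures": failures,
        "gate_passed": pass_rate >= min_pass_rate,
    }
```

Wiring `gate_passed` into CI means a prompt or tool change that breaks a known-good case blocks the release instead of surfacing in production.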

Day 7 — Go‑Live, Dashboards, and Budgets

- Publish dashboards for SLOs, errors, and costs; wire alerts to Slack/Email.

- Set FinOps guardrails: daily spend caps and routing to cheaper model variants off‑peak. (HireNinja FinOps)

- Run a canary release for 10% of traffic; expand if SLOs hold for 72 hours.
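One way to implement the canary split and the daily spend cap is sketched below. Hashing the request ID keeps routing deterministic, so the same request or session always lands on the same side. The function and class names are hypothetical, and the budget is kept in memory only; a real deployment would persist it and reset it each day.

```python
import hashlib


def in_canary(request_id, percent=10):
    """Deterministically route roughly `percent`% of request IDs to the canary."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < percent


class DailyBudget:
    """In-memory spend cap; refuses any charge that would exceed the cap."""

    def __init__(self, cap_usd):
        self.cap_usd = cap_usd
        self.spent_usd = 0.0

    def try_spend(self, amount_usd):
        if self.spent_usd + amount_usd > self.cap_usd:
            return False
        self.spent_usd += amount_usd
        return True
```

Expanding the rollout is then a matter of raising `percent` once the SLOs have held for the agreed window.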

When to lean on vendors vs build on standards

- Microsoft Agent 365: Centralized enterprise control with Entra integration and early‑access telemetry—great if you’re all‑in on Microsoft. (Wired) (The Verge)

- Salesforce Agentforce 360: Deep Salesforce/Slack workflows and agent prompting features (Agent Script). (TechCrunch)

- OpenAI AgentKit: Fast path to build, eval, and ship agents on OpenAI’s platform with a connector registry. (TechCrunch)

- Open Standards (MCP + A2A + OTel): Best for portability, multi‑cloud, and avoiding lock‑in while keeping strong governance. (MCP) (OTel) (A2A)

Common pitfalls and quick fixes

- Ambiguous ownership: Each Agent Card must have a named owner and on‑call channel.

- Opaque costs: Track cost per task in traces; auto‑route to cheaper models when SLO headroom allows.

- Prompt injection: Mark tools as read‑only vs destructive, validate tool output, and sandbox risky actions via MCP gateways.

- Unclear success criteria: Define outcome‑based SLOs, not just latency.

Keep going

- Add long‑term memory with secure RAG and audits. (HireNinja)

- Choose between browser agents and API automations with a decision framework. (HireNinja)

- Red‑team support flows before peak season. (HireNinja)

Call to action

Want a turnkey boost? Subscribe for weekly agent ops playbooks—then book a 30‑minute session with HireNinja to adapt this control‑plane plan to your stack.
